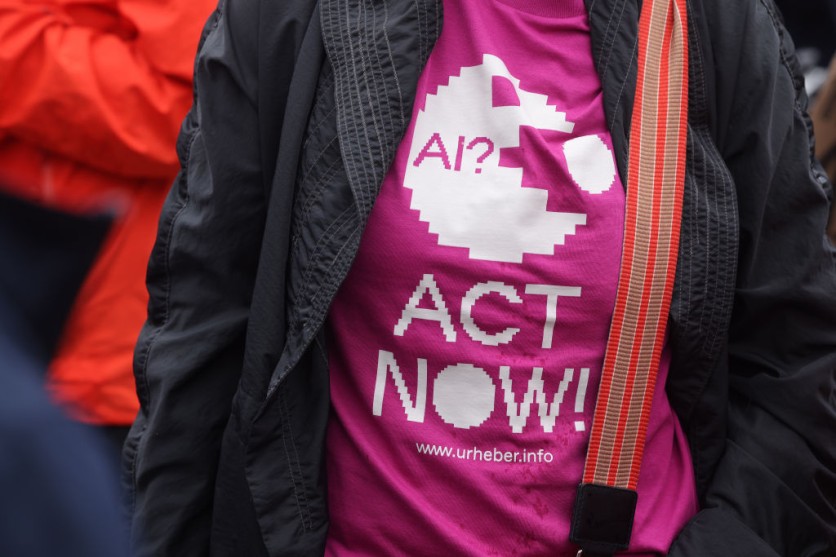

High-profile artificial intelligence (AI) researchers and specialists have urged technology companies and governments to allocate a significant share of their research and development budgets to AI safety and ethics.

The experts set out strategies for reducing the risks associated with AI in a report published ahead of the international AI Safety Summit in the UK.

The paper was written by three Turing Award winners, a Nobel laureate, and more than a dozen leading AI experts, who emphasize the need for proactive risk mitigation and ethical AI development.

One of their recommendations is that governments establish legal liability for harms caused by advanced AI systems when those harms could reasonably have been foreseen and prevented.

Laws Regulating AI Must Be Established

According to WION, there are currently no comprehensive laws governing AI safety, and the European Union's proposed legislation has been held up by ongoing negotiations among lawmakers.

According to renowned AI researcher Yoshua Bengio, modern AI models have become so powerful that democratic oversight is needed to ensure they are developed responsibly. He stressed the need to invest in AI safety because the technology is advancing faster than the safeguards meant to keep it in check.

"It needs to happen fast because AI is progressing much faster than the precautions taken," Bengio said, as quoted by VOA.

Other notable contributors to the paper include Yuval Noah Harari, Geoffrey Hinton, Andrew Yao, Daniel Kahneman, and Dawn Song.

As AI technology advances, concerns about its safety and ethical use have grown, with prominent figures such as tech mogul Elon Musk calling for a pause in the development of powerful AI systems.

However, some companies have argued against strict rules, pointing to high compliance costs and disproportionate liability risks.

British computer scientist Stuart Russell disputed such claims, noting that many businesses are subject to stricter regulation than AI companies are, a contrast that underscores the importance of ethical AI development.

Some Serious AI Ethics Issues

The absence of comprehensive AI safety laws has heightened concerns about the potential risks of AI technology, including data privacy, security, ethics, and effects on the workforce. Generative AI in particular raises ethical questions about content creation, transparency, and harmful output.

According to a TechTarget article, autonomous content creation is one capability of AI systems, particularly generative AI, that can significantly boost productivity. However, these systems may also produce harmful content, whether intentionally or not. To address this, technology experts advise using generative AI to supplement human work and ensuring that its output complies with ethical guidelines and brand values.

Another issue is copyright and the legal risk that comes with it. Because generative AI tools are often trained on enormous datasets drawn from many sources, it can be difficult to determine where generated material came from. Businesses that rely on AI-generated content or products therefore face copyright and intellectual property risks. Until intellectual property and copyright law on these questions becomes clearer, experts urge companies to carefully review the outputs of AI models.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.