Generative artificial intelligence (AI) is currently dominating the tech scene. But US-based GPU manufacturer Nvidia wants to add "more dimensions" to this budding field.

Hence, the tech giant unveiled Magic3D - a high-resolution generative AI that creates 3D models from any given text prompts.

Text-to-3D Content Creation

Generative AI is an overarching label for any AI software that generates new images, videos, texts, audio, and even codes. Common examples include AI art generators such as Stable Diffusion, Midjourney, and many more.

Analysts forecast this growing field will spur even further, becoming a huge $110 billion market by 2030.

Nvidia appears to jump on this market after revealing its Magic3D tech. The company said that it could generate high-quality 3D textured mesh models based on input text prompts.

It also employs a coarse-to-fine process that leverages both low-and high-resolution diffusion priors to understand and replicate the 3D representation of the intended target content.

Simply put, if you want to have any type of object, say a black dress, Magic3D will provide you with a 3D mesh model of your text prompt.

It can even produce 3D models out of funky prompts, such as a car made out of sushi, a peacock on a surfboard, and even more specific and detailed prompts, such as Michael Angelo-style statue of an astronaut or a silver candelabra sitting on a red velvet tablecloth, with an only candle lit.

You can see the 3D mesh models of these prompts here.

It must be noted that these examples were drawn from the prompts provided by Nvidia on its website. It shows that the new AI tech can generate models out of highly specific prompts.

Furthermore, the company claims that Magic3D synthesized 3D content with 8x higher resolution supervision and 2x faster than DreamFusion, a text-to-3D tech that uses 2D diffusion.

Magic3D's Features

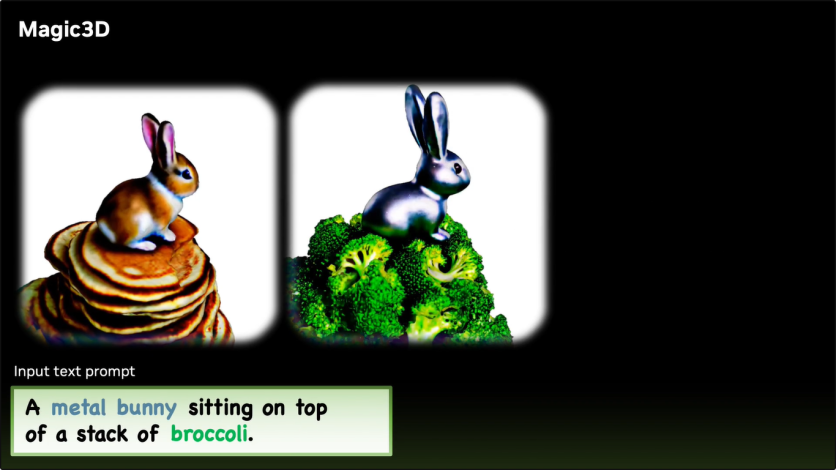

Nvidia also said they could edit an input text prompt to modify an already-generated 3D model. For example, the team behind the new tech demonstrated this edit feature by changing the mesh model of a baby bunny sitting on top of a stack of pancakes to a metal bunny sitting on top of a stack of broccoli.

Magic3D can also fine-tune the diffusion models with the help of DreamBooth and optimize the 3D models.

"The identity of the subject can be well-preserved in the 3D models," the team said.

The team uses a two-stage coarse-to-fine optimization framework to ensure fast and high-quality text-to-3D content creation.

A low-resolution diffusion prior is used in the first stage to produce a coarse model, which is then accelerated using a hash grid and sparse acceleration structure.

In the second stage, a textured mesh model that is initialized from the coarse neural representation is employed to enable optimization using a high-resolution latent diffusion model in conjunction with a differentiable renderer.

Related Article : This New AI Tool Could Accelerate the Development of Gene-Editing on a Large-Scale

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.