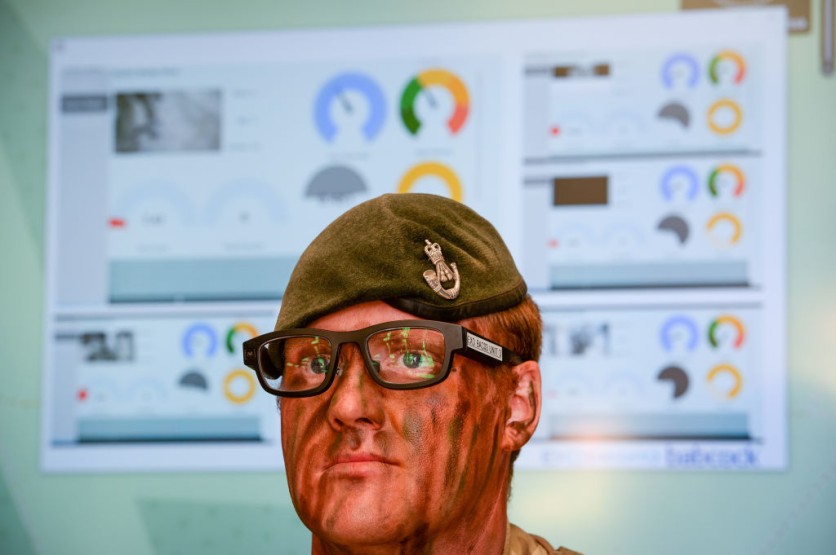

Cornell University's Smart Computer Interfaces for Future Interactions (SciFi) Lab has developed an AI-powered eyeglasses interface that can read the wearer's silent speech.

Called EchoSpeech, the eyewear uses acoustic sensing and artificial intelligence to recognize up to 31 unvocalized commands based on lip and mouth movements.

AI Reads Silent Voice Commands

The wearable interface requires just a few minutes of user training data before it can recognize commands and run them on a smartphone. It could help people who cannot vocalize sound, since silent speech can serve as the input to a voice synthesizer.

In design software such as CAD, the silent speech interface can also be paired with a stylus, almost eliminating the need for a keyboard and mouse.

Technologies like EchoSpeech also make it possible to communicate with others by smartphone in noisy settings, where speaking aloud is impractical.

The wearable technology has speakers and a pair of microphones no larger than pencil erasers.

The glasses transmit and receive sound waves across the face to sense mouth movements, and a deep learning algorithm analyzes those signals in real time with roughly 95% accuracy.

Because audio data is significantly smaller than image or video data, it uses less bandwidth and can be sent to a smartphone over Bluetooth in real time.
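To give a rough sense of the size gap between audio and video, the short Python sketch below compares uncompressed bitrates. All figures are illustrative assumptions, not EchoSpeech's published specifications: the two audio channels stand in for the pair of microphones, while the 48 kHz sample rate, 16-bit depth, and 640x480 video at 30 frames per second are hypothetical values chosen only for the arithmetic.

```python
def raw_audio_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bits_per_sample * channels


def raw_video_bitrate(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bits per second of uncompressed video."""
    return width * height * bits_per_pixel * fps


# Assumed values for illustration only (not taken from the EchoSpeech study).
audio_bps = raw_audio_bitrate(sample_rate_hz=48_000, bits_per_sample=16, channels=2)
video_bps = raw_video_bitrate(width=640, height=480, bits_per_pixel=24, fps=30)

# How many times larger the raw video stream is than the raw audio stream.
ratio = video_bps / audio_bps

print(f"audio: {audio_bps / 1e6:.2f} Mbit/s")
print(f"video: {video_bps / 1e6:.2f} Mbit/s")
print(f"video is about {ratio:.0f}x larger")
```

Under these assumptions the raw video stream is on the order of a hundred times larger than the raw audio stream. Real systems compress both, so the actual gap will differ, but the direction of the comparison is the same.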

Additionally, the data is processed locally on the smartphone rather than sent to the cloud, ensuring privacy-sensitive information never leaves the user's hands.

Next Step: Commercialization

The team is attempting to commercialize the technology underlying EchoSpeech with gap funding from Ignite: Cornell Research Lab to Market.

The SciFi Lab has created several wearable gadgets that use tiny video cameras and machine learning to track body, hands, and facial motions.

To track face and body movements, the lab has recently moved away from cameras and toward acoustic sensing, citing better battery life, greater security and privacy, and smaller hardware.

EchoSpeech builds on EarIO, the lab's wearable earbud that tracks facial movements and similarly uses acoustic sensing. In upcoming work, SciFi Lab researchers are investigating smart-glasses applications that monitor facial, eye, and upper body motions.

The lab believes that glasses will be a crucial personal computing platform for understanding human behavior in natural environments. That belief led to EchoSpeech.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.