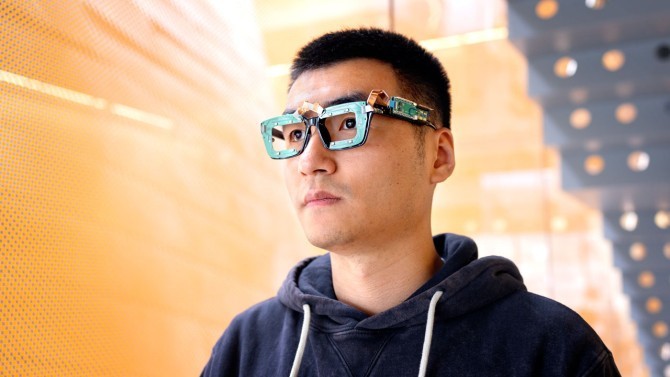

Cornell University researchers have unveiled two technologies that track gaze and facial expressions using sonar-like acoustic sensing.

The technologies can be built into commercial smartglasses or virtual reality (VR) and augmented reality (AR) headsets, and they consume significantly less power than camera-based systems, according to the research team.

Introducing GazeTrak and EyeEcho

The first of these technologies, GazeTrak, relies on acoustic signals rather than visual cues. By employing speakers and microphones mounted on eyeglass frames, GazeTrak captures and interprets soundwaves reflected off the face and eyes, enabling continuous inference of the user's gaze direction.
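
The article does not describe GazeTrak's signal processing, so the following is only a minimal sketch of the general sonar-style idea: emit a known sound, record the reflection, and find the echo's delay by cross-correlation. Every name and number here is an assumption made for illustration (the 48 kHz sample rate, the 18 to 21 kHz sweep, the simulated 14-sample delay, and all function names), and none of it is taken from the Cornell system, which also has to turn echoes into a gaze estimate.

```python
import math
import random

SAMPLE_RATE_HZ = 48_000      # assumed audio sample rate (not from the article)
SPEED_OF_SOUND_M_S = 343.0   # approximate speed of sound in air at room temperature


def make_chirp(n_samples, f_start_hz, f_end_hz):
    """Build a linear frequency sweep to use as the emitted probe signal."""
    duration_s = n_samples / SAMPLE_RATE_HZ
    sweep_rate = (f_end_hz - f_start_hz) / duration_s
    chirp = []
    for i in range(n_samples):
        t = i / SAMPLE_RATE_HZ
        phase = 2 * math.pi * (f_start_hz * t + 0.5 * sweep_rate * t * t)
        chirp.append(math.sin(phase))
    return chirp


def simulate_echo(chirp, delay_samples, attenuation, noise_std, total_len, seed=0):
    """Hypothetical microphone recording: noise plus one delayed, weaker copy of the chirp."""
    rng = random.Random(seed)
    received = [rng.gauss(0.0, noise_std) for _ in range(total_len)]
    for i, sample in enumerate(chirp):
        received[delay_samples + i] += attenuation * sample
    return received


def estimate_delay(chirp, received):
    """Return the lag (in samples) at which the chirp best matches the recording."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(chirp) + 1):
        score = sum(c * received[lag + i] for i, c in enumerate(chirp))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def delay_to_distance_m(delay_samples):
    """Convert a round-trip delay into a one-way distance to the reflecting surface."""
    round_trip_s = delay_samples / SAMPLE_RATE_HZ
    return SPEED_OF_SOUND_M_S * round_trip_s / 2


if __name__ == "__main__":
    chirp = make_chirp(n_samples=240, f_start_hz=18_000, f_end_hz=21_000)
    received = simulate_echo(chirp, delay_samples=14, attenuation=0.3,
                             noise_std=0.02, total_len=400)
    lag = estimate_delay(chirp, received)
    print(f"estimated delay: {lag} samples")
    print(f"estimated distance: {delay_to_distance_m(lag) * 100:.1f} cm")
```

A single echo delay only gives one distance. A system like the one described would need many such measurements over time, from several speaker and microphone pairs, before any model could infer where the eyes are pointing.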

Besides drawing less power, the acoustic approach offers a more discreet and comfortable user experience, according to the team.

The second technology, EyeEcho, is touted as the first eyeglass-based system capable of accurately detecting and replicating facial expressions in real time.

Like GazeTrak, EyeEcho uses speakers and microphones, in this case to capture the subtle skin movements associated with facial expressions. AI algorithms analyze these signals to animate an avatar that mirrors the user's expressions.

The team highlights minimal power consumption as a key advantage of both technologies: they can run for extended periods on smartglasses or VR headsets without draining the battery. That makes them practical for everyday use, whether for immersive VR experiences or hands-free video calls in various environments.

Potential Applications of GazeTrak and EyeEcho

The potential applications of GazeTrak and EyeEcho extend beyond entertainment and communication. GazeTrak, for instance, could assist individuals with low vision by providing screen reading capabilities, making digital content more accessible.

Both technologies also hold promise for aiding the diagnosis and monitoring of neurodegenerative diseases such as Alzheimer's and Parkinson's. By tracking changes in gaze patterns and facial expressions, these tools could offer valuable insights into the progression of these conditions from the comfort of a patient's home.

"It's small, it's cheap and super low-powered, so you can wear it on smartglasses every day; it won't kill your battery," Cheng Zhang, an assistant professor of information science at Cornell, said in a statement.

"In a VR environment, you want to recreate detailed facial expressions and gaze movements so that you can have better interactions with other users," said Ke Li, a doctoral student who led the GazeTrak and EyeEcho development.

The researchers will present their findings on GazeTrak and EyeEcho at two upcoming conferences: the Annual International Conference on Mobile Computing and Networking and the Association for Computing Machinery CHI Conference on Human Factors in Computing Systems.

The team's findings were posted on the preprint server arXiv.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.