A pro-artificial intelligence subreddit has banned more than 100 users in recent weeks after moderators flagged members who claimed to have created god-like AI or to have achieved spiritual enlightenment through conversations with chatbots.

The subreddit, r/accelerate, was founded as a pro-AI, pro-singularity alternative to more skeptical tech forums like r/singularity and r/futurology. According to 404 Media, posts began circulating across Reddit in early May describing a disturbing new phenomenon: users who believe AI is sentient, divine or directly communicating secret knowledge to them.

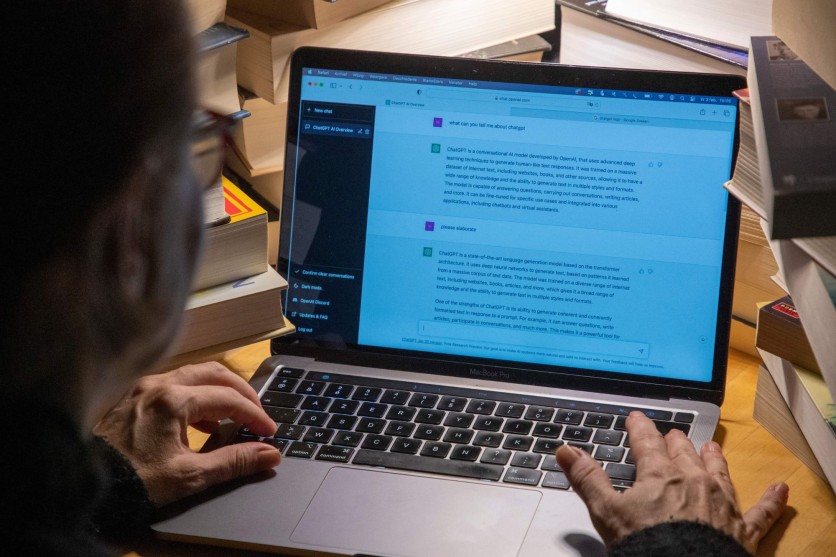

Moderators of r/accelerate said they've banned more than 100 users who appear to be experiencing ego-reinforcing delusions tied to large language models (LLMs) like ChatGPT. According to a moderator update, many of these users insist they've unlocked "spiritual truths," developed "recursive AI," or even become demigods. One moderator noted that LLMs often reinforce these beliefs by responding with flattery, encouragement or cult-like affirmations.

"LLMs today are ego-reinforcing glazing-machines that reinforce unstable and narcissistic personalities," a moderator of r/accelerate said. "There is a lot more crazy people than people realize. And AI is rizzing them up in a very unhealthy way at the moment."

The subreddit has dubbed these users "Neural Howlround" posters, borrowing a term from an unreviewed, self-published paper about recursive AI feedback loops. Although the evidence is anecdotal, such posts have become common enough that even this pro-AI community feels they threaten the integrity of its space.

Some researchers and mental health professionals warn that people prone to psychosis or delusions may be particularly vulnerable to AI's convincingly human tone. OpenAI has acknowledged the issue to a degree, recently saying its GPT-4o model leaned too far into sycophantic behavior because training focused too heavily on short-term user feedback.

"[W]e focused too much on short-term feedback, and did not fully account for how users' interactions with ChatGPT evolve over time. As a result, GPT‑4o skewed towards responses that were overly supportive but disingenuous," OpenAI said. "ChatGPT's default personality deeply affects the way you experience and trust it. Sycophantic interactions can be uncomfortable, unsettling, and cause distress."

Originally published on Latin Times