Science and the Springer Nature group, two of the most prestigious names in academic publishing, have announced new editorial standards in light of the emergence of artificial intelligence (AI).

According to a report by The Independent, these changes prohibit or severely limit the use of sophisticated AI bots like ChatGPT in writing research papers.

AI Threat

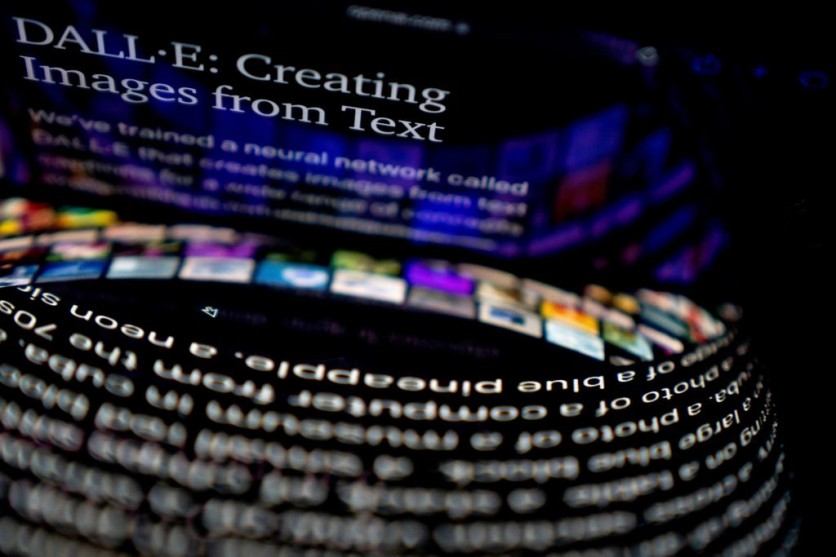

In December 2022, OpenAI's ChatGPT chatbot became well-known for its human-like responses to user questions, prompting dire warnings from industry experts about the potential disruptions the groundbreaking technology may cause.

Some experts have hailed the AI language model as a game-changer with the potential to displace current technologies like Google's search engine.

After the launch of the experimental chatbot, Google's management allegedly declared a "code red" for the company's search engine business.

The AI chatbot has shown the capacity to summarize studies, reason through and answer logic questions, and, most recently, pass complex tests in fields such as commerce and medicine.

Users have also noted that the AI app occasionally gives answers that seem convincing but are in fact completely wrong.

Experts' Worries

Holden Thorp, editor-in-chief of the Science journals, said that the publisher is revising its guidelines to prohibit the use of ChatGPT and any other AI tools in its articles. He said that an AI tool could not be an author.

Thorp has made it clear that violations of these rules are considered serious scientific misconduct, on par with plagiarism or improper manipulation of research images.

He said, however, that data sets legitimately and intentionally generated by AI in research papers for study purposes would not be affected by the new rules.

Concerns were also voiced in an editorial published on Tuesday, Jan. 24, by Springer Nature, a publisher of almost 3,000 journals, which feared that users of the tool might submit AI-written content as their own or use it to produce inadequately comprehensive literature reviews.

The editorial referenced other papers that have been written but not yet published, in which ChatGPT is listed as an author.

It said that language model tools will not be accepted as a credited author on a research article, since AI tools cannot assume responsibility as human authors can.

Springer Nature emphasized that researchers who used such tools during their research should document them in the paper's "methods" or "acknowledgements" sections.

In response to ChatGPT's rising profile, other publishing houses, such as Elsevier (which hosts more than 2,500 journals), have also updated their authorship guidelines.

Elsevier said that such AI models might be used to enhance the readability and language of a research publication but not to replace key tasks that authors should perform themselves, such as evaluating data or drawing conclusions.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.