In response to the rise in AI-generated political ads, two Democratic members of Congress, Sen. Amy Klobuchar of Minnesota and Rep. Yvette Clarke of New York, have written to Meta CEO Mark Zuckerberg and X CEO Linda Yaccarino, urging transparency and accountability.

The Thursday letter expresses "serious concerns" about AI-generated political advertisements on social media and requests an explanation of any risk mitigation measures. Legislators are concerned about the lack of openness surrounding this type of material and its ability to flood platforms with election-related disinformation as the 2024 US presidential elections approach.

In their letter, Klobuchar and Clarke urge social media sites to be proactive. "With the 2024 elections quickly approaching, a lack of transparency about this type of content in political ads could lead to a dangerous deluge of election-related misinformation and disinformation across your platforms - where voters often turn to learn about candidates and issues," the US lawmakers wrote, as quoted by AP News.

The pressure on social media companies comes as Klobuchar and Clarke lead a campaign to regulate AI-generated political advertisements.

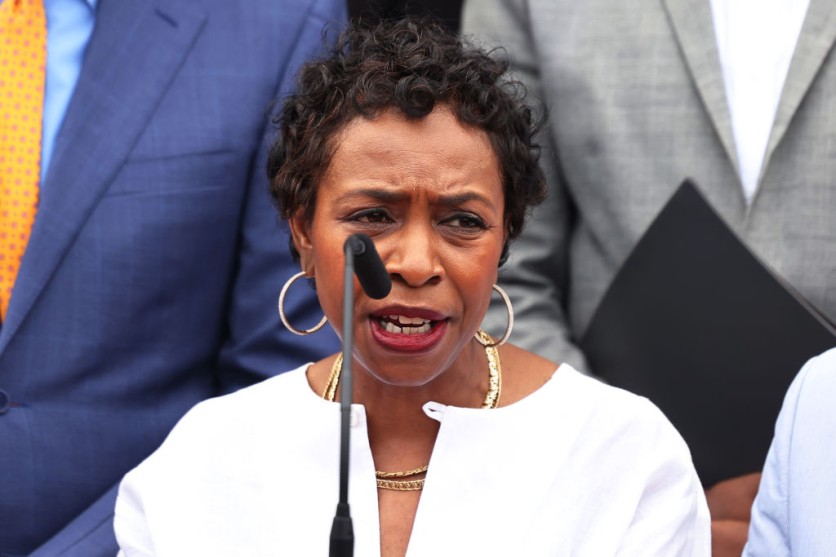

Rep. Yvette Clarke has filed the DEEPFAKES Accountability Act, a bill intended to protect people against misleading digital content such as deepfakes, including deepfake political statements and nonconsensual deepfake pornography.

ABC News reported that the bill provides resources to prosecutors, regulators, and victims, including the use of detection technologies, to counter the threat that illicit deepfakes pose. Although Clarke's proposal, first introduced in 2019, attempts to address several deepfake-related challenges, it may run into problems over content restrictions and possible First Amendment issues. The legislation's future in the House is still up in the air.

Deepfakes Threaten Democracy

Klobuchar is proposing companion legislation in the US Senate and intends to get it passed by the end of the year. Google has already said that, starting in mid-November, AI-generated election advertising on its platforms will carry clear disclaimers. Meta, by contrast, does not yet have a specific policy addressing AI-generated political advertisements, although it does have one prohibiting "faked, manipulated, or transformed" audio and images that are used for disinformation.

Klobuchar and Republican Sen. Josh Hawley of Missouri are among the co-sponsors of a bipartisan Senate measure prohibiting "materially deceptive" deepfakes about federal candidates, with exceptions for parody and satire.

Are Tech Companies Willing to Address Deepfake Threats?

Both Republican and Democratic campaigns are already using AI-generated political advertisements to influence the 2024 election. These advertisements, which frequently depict realistic-looking but made-up scenes, have sparked worries about their ability to mislead voters.

Klobuchar cited two examples: a deepfake video of Sen. Elizabeth Warren and a Republican National Committee advertisement depicting a bleak future if President Joe Biden wins reelection. The legislators are pushing for rules to guarantee honesty and openness in political advertising during the forthcoming election.

The capacity of tech corporations to meet this emerging problem while upholding free speech principles is coming under greater scrutiny as the discussion over how to regulate AI-generated content in politics continues.

Bradley Tusk, a political strategist and the CEO of Tusk Venture Partners, asserts that these platforms may not be adequately prepared to deal with election-related deepfakes because they currently lack the incentives to do so.

According to Tusk, the platforms have been unable and unwilling to stop the spread of damaging human-generated content, and the spread of generative AI makes the problem progressively worse. "In fact, the incentives are virtually reversed - if someone creates a deepfake of Trump or Biden that ends up going viral, that's more engagement and eyeballs on that social media platform," he said, as quoted in a New York Post article.

Ari Cohn, an attorney with TechFreedom, stressed the First Amendment and said deepfakes have not yet misled voters. However, the Federal Election Commission has started a formal process to regulate AI-generated deepfakes in political advertisements, and a public comment period is now open through October 16.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.