A recent analysis by the Mercator Institute for China Studies reveals that European academics are participating in "clearly problematic" artificial intelligence (AI) research projects with Chinese counterparts.

The collaborations span areas such as biometric surveillance, cybersecurity, and military applications, raising ethical concerns, as reported by Science Business.

Notably, the Berlin-based institute's report highlights collaboration between researchers at Bundeswehr University Munich, the German military's leading institute, and a People's Liberation Army university. The joint AI work covered projects such as drone target tracking and missile guidance, with papers published between 2019 and 2022.

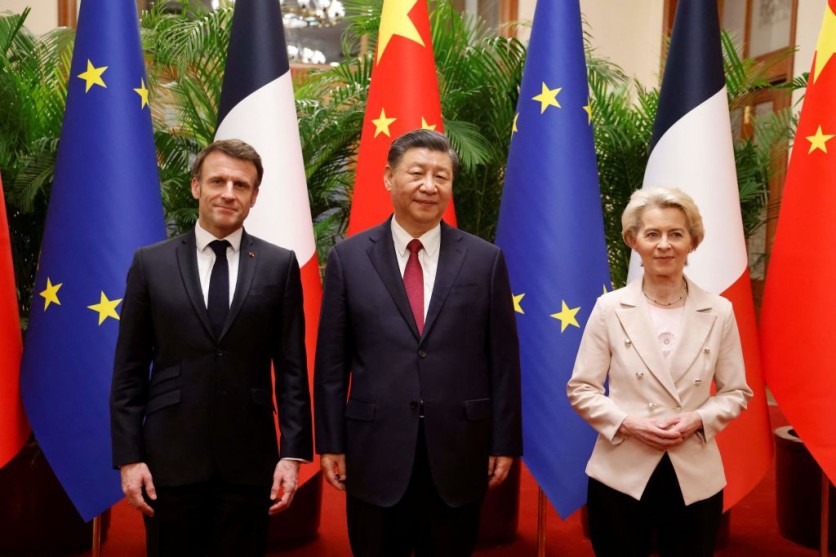

As tensions between Europe and Beijing escalate over human rights, technological competition, and potential geopolitical conflicts, research links with China are facing growing scrutiny. The European Union and its member states have steered Horizon Europe collaboration with China away from industrially sensitive projects and toward environmentally focused ones. However, the report finds that several EU-funded collaborations with Chinese military-linked universities are still ongoing.

What Does The Analysis Say?

Between 2017 and 2022, European researchers published over 16,000 AI-related papers with Chinese colleagues affiliated with institutions directly controlled by or closely tied to the Chinese military. This represents around half of all papers examined and includes collaborations with China's "Seven Sons of National Defense" universities, known for feeding personnel and research into Beijing's military.

While a significant portion of the collaborations involve medical AI, the report identifies numerous papers, published as recently as 2022, that present human rights, cybersecurity, or military risks. Examples include research on "negative mental-state monitoring," "cross-ethnicity face anti-spoofing recognition," and "integrated missile guidance."

The report also points out imbalances in the partnerships, particularly in funding sources. Of the papers disclosing funding, 80% were funded by China, with 60% from the Chinese government itself. This funding disparity raises concerns about China's potential influence on research priorities.

Additionally, the report notes that these papers tend to have more authors with Chinese affiliations than European ones, which may indicate that Chinese researchers more often initiate the projects. Co-authored papers also make up a larger share of Europe's AI research output than of China's.

The UK stands out in the analysis: 21% of all UK AI papers in 2021 were co-authored with Chinese partners, whereas papers with UK co-authors accounted for just 3% of China's total. This asymmetry raises concerns about an emerging dependency and suggests that limiting collaboration, and with it access to Chinese talent, could come at a significant cost.

The report calls for recalibrating AI ties with China, urging better mapping of the AI ecosystem and stronger due diligence on Chinese partners. It also recommends building awareness in Europe and stresses the importance of involving Chinese talent in discussions about safe and ethical research.

As part of these efforts, the report suggests that European countries consider implementing "proportionate" personnel screening systems to assess those engaged in research in sensitive fields. The Netherlands is already poised to introduce such checks.

US Military Unveils New AI Warfare Tool

The report comes as AI and military leaders recently gathered for a private, three-day retreat organized by Scale AI, a data contractor that works with OpenAI and the US Army. The AI Security Summit, held in the mountains of Utah, aimed to facilitate open discussion of industry challenges.

According to FirstPost, attendees from the military and AI sectors engaged in off-the-record conversations at a luxury hotel near Park City. The summit coincided with talks between US President Biden and Chinese President Xi Jinping addressing AI regulations.

The summit combined substantive talks with leisure activities, with attendees choosing personalized cowboy hats, relishing gourmet meals, and taking part in archery lessons.

Although some details have emerged, including panel discussions on US-China AI relations and in-depth analyses of AI chips, the confidential nature of the discussions has kept much of the summit under wraps.

Meanwhile, the US Army has introduced a new AI-based tool, the Advanced Dynamic Spectrum Reconnaissance (ADSR), to enhance electronic warfare missions. According to The Defense Post, this technology enables wireless communication networks to detect and evade enemy jamming while reducing radio frequency emissions to minimize risks for friendly forces.

During a recent multinational exercise in Germany, soldiers tested the system and trained NATO allies on it, underscoring the importance of real-time spectrum awareness for identifying and responding to enemy electromagnetic signatures in electronic warfare.


ⓒ 2025 TECHTIMES.com All rights reserved. Do not reproduce without permission.