Top AI companies, including Meta, Microsoft, Amazon, OpenAI, and others, have signed a pledge committing to a set of child safety principles, the nonprofit organization Thorn announced.

The new guidelines, dubbed the Safety by Design principles, are intended to prevent the sexual abuse of children as well as the production and dissemination of AI-generated child sexual abuse material (AIG-CSAM).

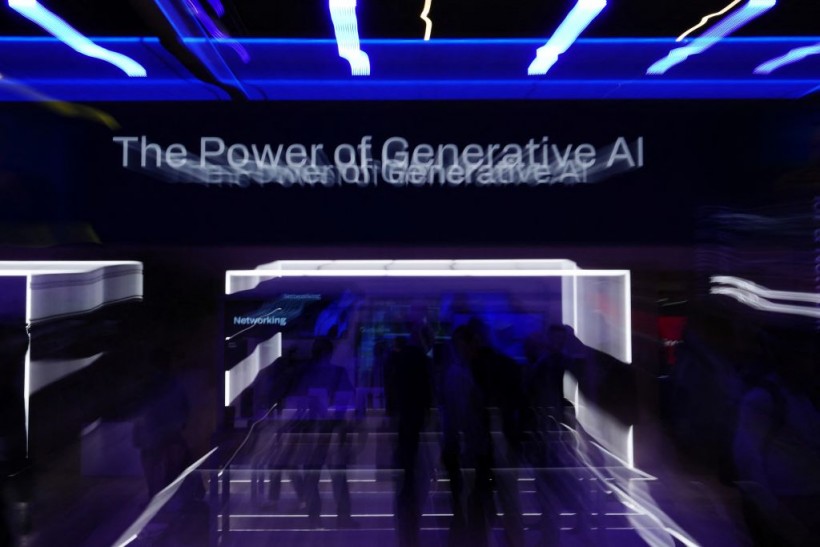

(Photo: PAU BARRENA/AFP via Getty Images) Visitors walk under a display reading "The Power of Generative AI" as they visit the stand of American multinational corporation Qualcomm during the Mobile World Congress (MWC), the telecom industry's biggest annual gathering, in Barcelona on February 27, 2024.

Under the pledge, signatories commit to applying these child safety guidelines throughout the machine learning (ML)/AI lifecycle, from the earliest stages of development through deployment and maintenance.

The accompanying paper argues that every stage of this process offers an opportunity to put child safety first, regardless of the data being used.

Deepfakes and AI-generated CSAM have drawn significant attention in Congress and elsewhere, with accounts of adolescent girls at school being sexually exploited through AI-generated images depicting their likenesses.


Deepfake Searches

NBC News previously reported that when it searched Microsoft's Bing for terms such as "fake nudes," or for specific female celebrities along with the term "deepfakes," sexually explicit deepfakes featuring real children's faces were among the top results.

NBC News also reported that in March 2024 it found an advertising campaign running on Meta platforms for a deepfake app that offered to "undress" a photo of a 16-year-old actress.

One of the principles calls for advancing technology that lets companies detect whether an image was generated by artificial intelligence. Many early forms of this technology, such as watermarks, can be easily removed. Another principle is that AI model training datasets must not contain CSAM.

Surge of CSAM

The new principles come as a recent report from the National Center for Missing & Exploited Children (NCMEC) highlights a disturbing surge in child sexual exploitation online.

The NCMEC's annual CyberTipline report, released mid-April this year, reveals a concerning rise in various forms of abuse, including the dissemination of child sexual abuse material (CSAM) and financial and sexual extortion.

Reports of online child exploitation reached a startling 36.2 million in 2023, an increase of more than 12% from the year before. Most tips concerned CSAM, such as images and videos.

There was also a sharp rise in financial and sexual extortion, in which predators coerce minors into sending explicit photos or videos and then demand money.

The center only began tracking AI-generated CSAM in 2023, but it has already received 4,700 reports in this category. This emerging trend makes it harder for law enforcement to identify real child victims.

In the US, it is illegal to produce or distribute material depicting the sexual abuse of children, including material generated by artificial intelligence. However, most reported incidents originate outside the US, underscoring how widespread the problem is.

According to the report, more than 90% of the CSAM incidents recorded in 2023 originated outside the US.


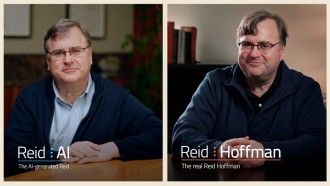

(Photo: Tech Times)