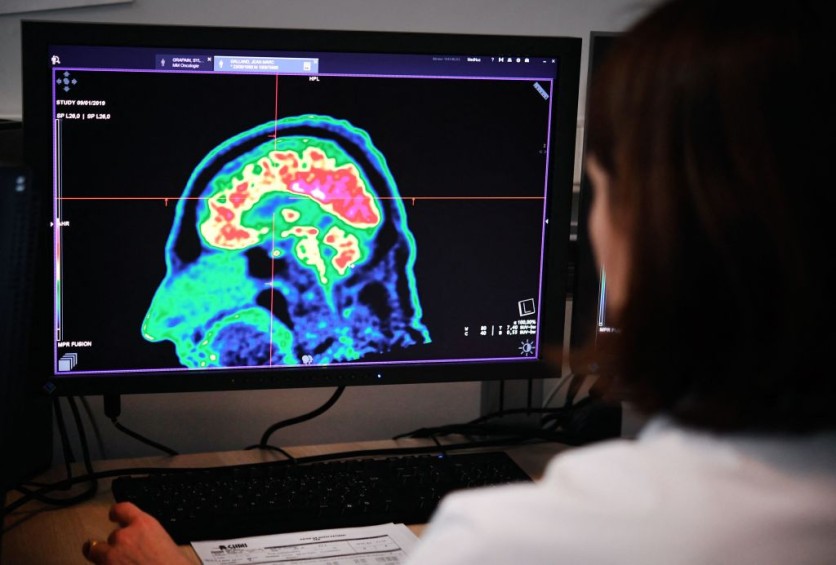

Along with various other companies in the brain-computer interface (BCI) field, Elon Musk's Neuralink and Bill Gates-backed Synchron have been pioneering the development of brain chips.

These chips are designed to be implanted directly into the brain tissue or within the skull.

Emerging Non-Invasive BCI Technology

Implanted devices raise concerns about potential risks such as physical damage during implantation, cybersecurity threats, long-term effects on brain health, and data privacy issues.

However, researchers have demonstrated non-invasive BCIs in a recent study, offering a promising alternative whose benefits include enhanced safety, affordability, scalability, and accessibility for a broader population.

A breakthrough in brain-computer interface technology has emerged from Carnegie Mellon University (CMU), offering a non-invasive method for controlling objects using only the power of thought.

Unlike invasive BCIs that require brain implants, this approach allows users to track and manipulate moving objects on a screen by using AI to decode their neural signals.

Conventional non-invasive brain-computer interfaces are limited by their lower accuracy compared to invasive BCIs. They rely on external sensors that gather data without direct contact with brain tissue, which makes them susceptible to interference from the user's environment.

However, researchers at CMU propose a solution to this issue using AI-based deep neural networks. These networks represent a significant advancement beyond traditional artificial neural networks employed in tasks like facial and speech recognition.

How EEG Data Fuels Deep Neural Networks

Characterized by its many layers and nodes, a deep neural network offers a more sophisticated approach than shallower, traditional artificial neural networks (ANNs).

This complexity enables deep neural networks to handle intricate tasks efficiently, including processing complex and noisy datasets.

In this study, 28 human participants demonstrated the capability of a deep neural network-powered brain-computer interface to accurately track objects on a screen using only their thoughts.

The participants wore non-invasive BCI sensors on the scalp while their brain activity was recorded using electroencephalography (EEG).

The collected EEG data was then used to train an AI-driven deep neural network. Remarkably, this network was adept at interpreting the participants' intentions regarding continuously moving objects solely by analyzing the data from the BCI sensors.
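To make the idea of training a network on EEG data more concrete, here is a minimal sketch in plain Python. It is not the CMU team's model or data: the "EEG" features are synthetic, and the names `make_synthetic_eeg`, `TinyDecoder`, and `run_demo`, along with the network size, learning rate, and channel count, are all hypothetical choices made for illustration. It only shows the general pattern of fitting a small neural network that maps noisy sensor readings to an intended 2D cursor velocity.

```python
import math
import random


def make_synthetic_eeg(n_samples, n_channels, rng):
    """Hypothetical stand-in for EEG features (NOT real data): each sample is
    a vector of channel values whose hidden mixture encodes an intended 2D
    velocity (vx, vy). Sensor noise is added to the observed channels."""
    mix = [[rng.uniform(-1, 1) for _ in range(n_channels)] for _ in range(2)]
    xs, ys = [], []
    for _ in range(n_samples):
        clean = [rng.gauss(0, 1) for _ in range(n_channels)]
        target = [math.tanh(sum(m * v for m, v in zip(row, clean))) for row in mix]
        noisy = [v + rng.gauss(0, 0.1) for v in clean]
        xs.append(noisy)
        ys.append(target)
    return xs, ys


class TinyDecoder:
    """Small network: input -> tanh hidden layer -> linear 2D output.
    A real deep network would stack many more layers."""

    def __init__(self, n_in, n_hidden, rng):
        s1 = 1 / math.sqrt(n_in)
        s2 = 1 / math.sqrt(n_hidden)
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-s2, s2) for _ in range(n_hidden)] for _ in range(2)]
        self.b2 = [0.0, 0.0]

    def forward(self, x):
        h = [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        out = [sum(w * v for w, v in zip(row, h)) + b
               for row, b in zip(self.w2, self.b2)]
        return h, out

    def train_step(self, x, y, lr):
        """One stochastic gradient step on 0.5 * squared error."""
        h, out = self.forward(x)
        err = [o - t for o, t in zip(out, y)]
        # Backpropagate to the hidden layer before touching w2.
        dh = [sum(err[k] * self.w2[k][j] for k in range(2)) * (1 - h[j] ** 2)
              for j in range(len(h))]
        for k in range(2):
            for j in range(len(h)):
                self.w2[k][j] -= lr * err[k] * h[j]
            self.b2[k] -= lr * err[k]
        for j in range(len(h)):
            for i in range(len(x)):
                self.w1[j][i] -= lr * dh[j] * x[i]
            self.b1[j] -= lr * dh[j]


def mse(model, xs, ys):
    """Mean squared error over all samples and both output dimensions."""
    total = 0.0
    for x, y in zip(xs, ys):
        _, out = model.forward(x)
        total += sum((o - t) ** 2 for o, t in zip(out, y))
    return total / (2 * len(xs))


def run_demo(seed=0, epochs=30, lr=0.02):
    """Train on synthetic data and return (loss_before, loss_after)."""
    rng = random.Random(seed)
    xs, ys = make_synthetic_eeg(200, 8, rng)
    model = TinyDecoder(8, 16, rng)
    before = mse(model, xs, ys)
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            model.train_step(x, y, lr)
    return before, mse(model, xs, ys)


loss_before, loss_after = run_demo()
print("MSE before training:", loss_before)
print("MSE after training:", loss_after)
```

In a real system, the input would be windows of recorded EEG rather than random numbers, and the decoded velocity would drive the on-screen cursor continuously as new sensor readings arrive.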

The current study's findings suggest that AI-powered, non-invasive BCIs may play a pivotal role in enabling individuals to control external devices without the need for manual input.

This advancement could revolutionize human-technology interaction, offering new avenues for exploring brain function and significantly improving the quality of life for those with amputations or disabilities.

Bin He, a professor of biomedical engineering at CMU and one of the study authors, mentioned ongoing efforts to expand the technology's application, particularly for individuals affected by motor impairments resulting from stroke.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.