Kling AI, an AI-powered creative platform, is rolling out a suite of generative AI models designed to streamline how visual and audio content are made, a move that underscores the company's efforts to build a cohesive, end-to-end creative platform.

The company recently unveiled the O1 series, its latest unified multimodal models, which are built to accept virtually any type of input (text, images, characters, objects, or existing video footage) as a prompt. It also released Video 2.6, a model that generates native audio together with video.

In the rapidly accelerating landscape of generative AI, creators continue to struggle with fragmented workflows: one model for video generation, another for post-production editing, and yet another for image generation or voiceover. Kling AI aims to eliminate this fragmentation with its O1 unified multimodal architecture and Video 2.6 model featuring native audio generation.

Kling Video O1 Model: A Unified Model for Video/Image Editing and Generation

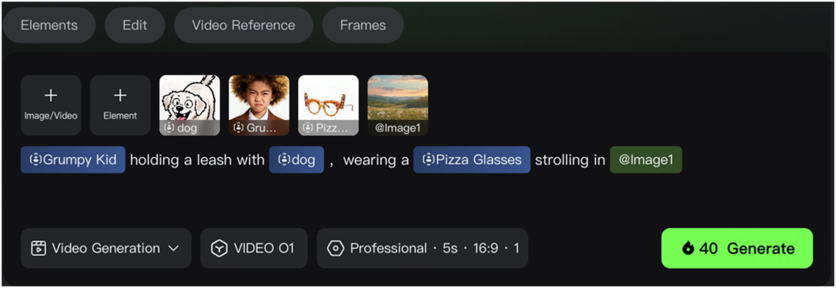

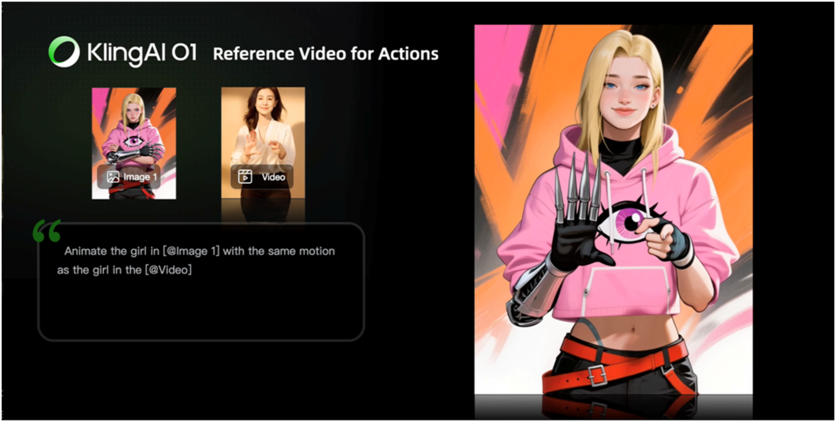

At the heart of the announcement is Video O1, which Kling AI frames as a unified multimodal model built to interpret virtually any type of input—text prompts, images, characters, objects, or existing footage—as a prompt.

It also integrates a wide range of tasks into one model, including reference-to-video, text-to-video, start-and-end-frame generation, video content editing, modifications, transformations, restyling, and camera extension. In other words, rather than switching tools depending on whether they are extending a shot, changing weather conditions in a scene, or restyling footage, creators can do it all within one system.

Currently, the model generates videos between 3 and 10 seconds in length. Kling AI also shared internal blind test results comparing Video O1 to leading models. The company claims that in image-to-video generation tasks, Video O1 achieved a performance win ratio of 247% against Google's Veo 3.1 Fast. In instruction-based video transformation, it claims a 230% win ratio against Runway's Aleph model. These are internal metrics rather than independent benchmarks, but they signal Kling AI's confidence that it can compete at the top tier of the industry.
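One way to read these figures, since a share of wins cannot exceed 100%, is as a win-to-loss ratio: wins divided by losses, with ties excluded. That reading is an assumption, as the company's test methodology is not described here. Under it, each ratio can be converted into the share of decisive comparisons won, as the short Python sketch below illustrates (the function name is hypothetical).

```python
def win_share_from_ratio(ratio_percent: float) -> float:
    """Convert a win-to-loss ratio (in percent) into the share of
    decisive comparisons won.

    ASSUMPTION: the reported "win ratio" means wins / losses with ties
    excluded. The article does not confirm this definition.
    """
    if ratio_percent < 0:
        raise ValueError("ratio_percent must be non-negative")
    ratio = ratio_percent / 100.0   # e.g. 247% -> 2.47 wins per loss
    return ratio / (1.0 + ratio)    # wins / (wins + losses)


# Figures as reported by Kling AI for Video O1.
reported = {
    "Video O1 vs Veo 3.1 Fast (image-to-video)": 247,
    "Video O1 vs Runway Aleph (video transformation)": 230,
}

for comparison, pct in reported.items():
    print(f"{comparison}: {win_share_from_ratio(pct):.1%} of decisive comparisons")
```

Under this assumed reading, a 247% ratio corresponds to winning roughly 71% of decisive comparisons, and a 230% ratio to roughly 70%.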

Kling Image O1: Editing with Control and Precision

Complementing the video model is Image O1, which focuses on high-fidelity image generation and editing. Kling AI attributes its strength to a robust knowledge base and multimodal reasoning that help it interpret user intent accurately.

A notable feature is its ability to process up to ten reference images simultaneously to rearrange elements, transfer styles, or extract specific features. This makes precise image editing accessible to non-professionals: users can add, remove, or modify objects and characters while preserving the original image's style, lighting, and texture. The model is also designed for complex professional workflows, such as generating photorealistic 3D renderings from interior design sketches or adjusting lighting based on directional arrows. It also maintains subject consistency across multiple generated images, a vital feature for IP character design and comic creation. In its internal testing on multi-image reference tasks, Kling AI claims Image O1 achieved a win rate of 174% against Nano Banana and 123% against Dreamina Image 4.0.

Kling Video 2.6: Native Audio Generation for Richer Storytelling

Kling AI expands beyond visual creation with its newly released Video 2.6 model, which introduces built-in audio generation—a major step toward fully unified multimedia production.

Video 2.6 can generate video together with dialogue, music, ambient noise, and sound effects in a single workflow. It supports human voices (speaking, singing, rapping) and a wide range of environmental sounds such as ocean waves, crackling fire, or shattering glass.

Creators can specify emotions, tone, rhythm, pacing, and volume, resulting in natural, emotionally aligned speech—from whispers to dramatic shouts. The system also tightly synchronizes audio with visual motion, enabling accurate lip-sync, rhythm-matched actions, and consistent environmental soundscapes.

With native audio, creators can now produce multi-character conversations, news broadcast segments, musical performances, narrative shorts with ambient sound, and fully sound-designed advertisements. The model can blend narration, background effects, and natural dialogue simultaneously, for example by generating a commercial with layered rain ambience, a French voiceover, and in-scene character lines.

Industry Impact: How Unified Models May Reshape Creative Production

Kling AI's unified approach arrives amid a race to put next-generation generative AI into real-life applications, as established firms and startups vie to build tools that can interpret complex instructions and deliver professional-grade results.

The potential applications for the O1 models span numerous creative sectors. For independent filmmakers and large studios, the technology could dramatically accelerate post-production. Directors and editors could articulate changes in natural language within a single interface, using reference images to lock in characters and props for seamless consistency across different scenes.

In the fashion industry, the models could reduce the immense cost and logistical challenges of physical photoshoots. Designers could generate models showcasing their clothing from multiple angles against various virtual backgrounds, creating a digital runway. Similarly, e-commerce advertisers could rapidly produce high-quality visual assets or virtual try-on experiences by simply uploading product and model images.

As for Video 2.6, it currently supports 5-second and 10-second outputs in English and Chinese. Users can create complete audio-visual videos from text and transform static images into dynamic scenes with matching sound. The model can reduce production costs for sellers, advertisers, and influencers by enabling fast creation of product demos, social videos, interviews, and commercial-ready content.

With the launch of the O1 series and the Video 2.6 model, Kling AI has firmly planted its flag in the competitive generative AI landscape. We will now be watching closely to see if this unified approach can truly change content creation as we know it. If it holds up under real-world pressure, Kling AI will have done more than release a new model; it will have proposed a new, more intuitive paradigm for human-AI collaboration in creativity.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.