AI chips have turned into one of the most contested strategic assets in technology, reshaping how companies build, deploy, and scale artificial intelligence systems. As AI models grow more complex and pervasive, the performance and efficiency of AI processors increasingly determine which firms can deliver faster innovation at sustainable cost.

This dynamic has ignited intense "chip wars" among established semiconductor leaders and cloud hyperscalers designing their own custom silicon.

What AI Chips Really Are and Why They Matter

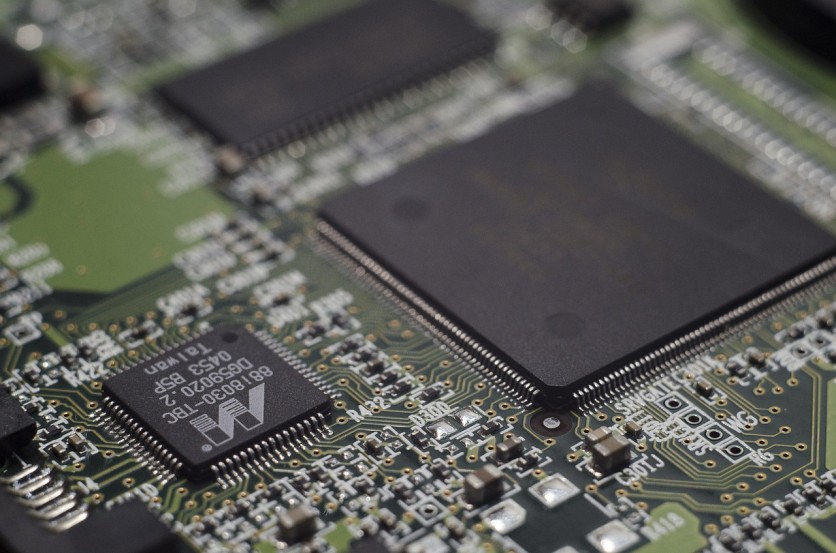

AI chips, often referred to as AI processors or accelerators, are specialized semiconductors engineered to handle the massive parallel computations required for training and running modern AI models.

Unlike general-purpose CPUs, which excel at a wide variety of tasks but run them on a relatively small number of cores built for largely sequential instruction streams, AI chips are optimized for the matrix multiplications, tensor operations, and high-throughput workloads central to deep learning.

Because they are built for these highly parallel operations, AI chips can perform significantly more computations per second, and use less energy per operation, than traditional processors on AI-specific tasks.
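To make the parallelism concrete, here is a minimal sketch in plain Python of the matrix multiplication at the heart of a neural network layer. The function names and the tiny input values are illustrative assumptions, not taken from any vendor's software. The point is that every output cell is an independent dot product, which is the kind of work an accelerator can spread across many arithmetic units at once.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # Each output cell depends only on one row of `a` and one column of `b`,
    # so all rows * cols cells could in principle be computed simultaneously.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def multiply_add_count(rows, inner, cols):
    """Multiply-add operations in a (rows x inner) @ (inner x cols) product."""
    return rows * inner * cols


# Hypothetical toy data: 2 samples with 2 features, and a 2-in, 2-out layer.
inputs = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
outputs = matmul(inputs, weights)  # [[19, 22], [43, 50]]
```

The operation count grows quickly with size: under the same formula, a single product of two 4096 x 4096 matrices takes 4096 * 4096 * 4096, or roughly 69 billion, multiply-adds. That is why hardware built to run them in parallel matters.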

This means lower latency for real-time applications, higher throughput for large-scale training jobs, and better performance per watt in data centers. In practice, the choice of AI processor influences everything from a company's AI research velocity to the economics of serving millions of daily users.

As organizations deploy AI into search, recommendation systems, content generation, fraud detection, and industrial automation, compute demand has surged.

In many cases, access to capable AI chips has become a bottleneck, especially during periods of high demand or supply chain strain. As a result, AI processors are no longer just another component; they are a core enabler of digital strategy and competitive differentiation.

Why NVIDIA AI Chips Became the Default

NVIDIA AI chips emerged as the default choice for many AI workloads thanks to a combination of hardware performance and a mature software ecosystem.

While GPUs were originally developed for graphics, NVIDIA invested heavily in adapting them to general-purpose and AI workloads, providing libraries, frameworks, and development tools that made it easier for researchers and engineers to harness their parallel capabilities.

Crucially, NVIDIA's CUDA platform allowed developers to write software that tapped directly into GPU acceleration, creating a powerful moat around its hardware. Over time, this ecosystem attracted academic labs, startups, and large enterprises alike, reinforcing NVIDIA's position as the primary supplier of AI accelerators.

As major deep learning frameworks such as TensorFlow and PyTorch were optimized for NVIDIA GPUs, the company's chips became nearly synonymous with AI development.

This dominance has strategic implications. When so many AI workloads depend on NVIDIA AI chips, organizations are exposed to concentrated supplier risk. Pricing, availability, and product roadmap decisions made by a single vendor can influence the economics and pace of AI deployment across entire industries.

That concentration is one of the reasons why many large technology companies have moved to design their own custom silicon as a hedge.

Custom Silicon: Tech Giants' Counterpunch

Custom silicon refers to application-specific integrated circuits (ASICs) and other tailored chips that are designed in-house to suit particular workloads or products. In the context of AI, custom silicon allows companies to co-design hardware and software, aligning AI processors closely with their models, frameworks, and infrastructure.

Large cloud providers and consumer technology companies have strong incentives to pursue this path. They operate enormous fleets of servers and run AI workloads at a scale where even modest gains in performance-per-watt or cost-per-inference translate into substantial savings.

By tuning custom silicon to their own data formats, networking setups, and model architectures, they can reduce overhead, streamline communication, and eliminate features they do not need.

For example, some companies design chips specifically for AI training, while others focus on inference accelerators that power recommendation systems, search ranking, or on-device AI.

This targeted optimization can yield benefits that are difficult to achieve with general-purpose AI chips designed for a broad market. At the same time, custom silicon can reduce dependency on vendors like NVIDIA, even if it does not eliminate the need for third-party AI processors entirely.
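The scale argument can be illustrated with back-of-the-envelope arithmetic. The sketch below is a hedged example only: the fleet size, per-server power draw, and electricity price are hypothetical round numbers chosen for readability, not figures from any company. It compares the annual energy bill of a fleet before and after a 10 percent cut in the power needed for the same work.

```python
HOURS_PER_YEAR = 8760  # 24 hours * 365 days


def annual_energy_cost(servers, watts_per_server, usd_per_kwh):
    """Yearly electricity cost in USD for a fleet running around the clock."""
    kwh = servers * watts_per_server * HOURS_PER_YEAR / 1000
    return kwh * usd_per_kwh


# Hypothetical fleet: 10,000 servers at 1,000 W each, paying $0.10 per kWh.
baseline = annual_energy_cost(servers=10_000, watts_per_server=1_000, usd_per_kwh=0.10)

# Same work done at 900 W per server, i.e. a 10 percent efficiency gain.
improved = annual_energy_cost(servers=10_000, watts_per_server=900, usd_per_kwh=0.10)

savings = baseline - improved
print(f"Baseline: ${baseline:,.0f}  Improved: ${improved:,.0f}  Savings: ${savings:,.0f}")
```

Under these assumed inputs the difference is about $876,000 a year in electricity alone, before counting cooling or hardware costs, and it scales linearly with fleet size.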

However, developing custom silicon is complex and expensive. It requires specialized engineering talent, long design cycles, extensive validation, and close collaboration with manufacturing partners.

Only firms with significant resources, large-scale AI workloads, and long planning horizons can realistically pursue this strategy. This is why custom silicon efforts are concentrated among global tech giants, cloud platforms, and a few well-funded startups.


The Real Chokepoints: Foundries, Tools, and Geopolitics

Even as tech giants invest heavily in custom AI chips, they remain reliant on a small number of advanced semiconductor foundries capable of manufacturing these designs at leading-edge process nodes.

Facilities that can produce cutting-edge AI processors, including the fabs equipped for the most advanced lithography, are capital-intensive and geographically concentrated.

This dependence creates new chokepoints. While designing custom silicon may reduce reliance on a single AI chip supplier, it often increases reliance on a limited set of manufacturing partners and specialized equipment vendors.

Any disruption in those supply chains, whether due to geopolitical tensions, export controls, natural disasters, or capacity constraints, can reverberate through the AI ecosystem.

In parallel, governments have begun to view AI chips as strategic assets tied to national security and economic competitiveness. Export controls on advanced AI processors, subsidies for domestic semiconductor manufacturing, and restrictions on equipment sales reflect this shift.

The chip wars, therefore, are not only commercial contests among companies; they are intertwined with broader geopolitical rivalries and industrial policy objectives.

These dynamics underscore that AI chips are more than technical components. They sit at the intersection of innovation, supply-chain resilience, and international relations.

For organizations building AI systems, the landscape of available AI processors is shaped as much by policy decisions and manufacturing capacity as by performance benchmarks.

What the Chip Wars Change for Enterprises and Developers

For enterprises adopting AI, the chip wars introduce both opportunities and complexities.

On one hand, greater competition among AI chips and custom silicon promises better performance, improved energy efficiency, and potentially lower long-term costs. As more players introduce AI processors tailored to different workloads, organizations gain more flexibility to match hardware to their specific needs.

On the other hand, this diversity can fragment the ecosystem. Applications that once relied on a relatively uniform stack of NVIDIA AI chips may now need to support multiple backends across different clouds and on-premises deployments.

This raises questions about portability, vendor lock-in, and the engineering effort required to optimize models for heterogeneous hardware.
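One common way to contain that effort is to route hardware-specific code through a single dispatch point with a portable fallback. The following is a minimal sketch of the idea in plain Python. The registry, the backend names, and the `run_dot` helper are all hypothetical and do not correspond to any real framework's API.

```python
def dot_reference(x, y):
    """Portable fallback: a plain dot product that runs anywhere."""
    return sum(a * b for a, b in zip(x, y))


# Hypothetical registry mapping backend names to implementations.
# A real deployment might register vendor-specific kernels here.
KERNELS = {"cpu": dot_reference}


def run_dot(x, y, preferred, kernels=KERNELS):
    """Use the first preferred backend that is registered, else fall back to CPU."""
    for name in preferred:
        kernel = kernels.get(name)
        if kernel is not None:
            return name, kernel(x, y)
    return "cpu", dot_reference(x, y)


# "vendor_a_accelerator" is a made-up name with no registered kernel,
# so the call falls through to the CPU implementation.
backend, result = run_dot([1, 2, 3], [4, 5, 6], ["vendor_a_accelerator", "cpu"])
```

Application code calls `run_dot` and never names a chip directly, so adding or dropping a backend means changing the registry rather than every call site. The trade-off is that a lowest-common-denominator interface can hide the hardware-specific features that deliver the best performance.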

Developers face similar trade-offs. New AI chips may offer compelling performance or cost advantages, but they may also require learning new tools, libraries, or programming models.

While many providers strive to support common AI frameworks, subtle differences in memory hierarchies, data formats, and interconnects can still influence performance. As a result, organizations increasingly need to consider hardware-aware optimization as part of their AI strategy.

For end users, the impact of the chip wars is often indirect but meaningful. The availability and cost of AI compute affect how quickly new features can be rolled out, how responsive AI-powered services feel, and whether advanced capabilities can run locally on devices rather than relying solely on the cloud.

Over time, improvements in AI processors are likely to shape experiences in areas such as personalized recommendations, natural language interfaces, real-time translation, and on-device creativity tools.

How AI Chips Shape the Next Phase of AI Adoption

As AI systems move from experimental pilots to critical infrastructure, AI chips have become a central lever for scaling responsibly and sustainably.

The competition between NVIDIA AI chips, alternative accelerators, and in-house custom silicon is set to influence which organizations can deploy AI widely while keeping costs, energy consumption, and latency under control.

In this context, chip wars are less about headline-grabbing rivalries and more about deeper shifts in how computing is structured.

Companies that align their AI strategy with the evolving landscape of AI processors, whether by embracing custom silicon, diversifying suppliers, or optimizing workloads for a mix of hardware, will be better positioned to navigate uncertainty.

Those decisions will help determine who can turn AI from a promising technology into a dependable foundation for products, services, and entire industries.

Frequently Asked Questions

1. How do AI chips differ from traditional server CPUs in real deployments?

AI chips focus on massively parallel math operations, so in practice they handle model training and inference, while CPUs still manage orchestration, networking, storage, and general logic around those AI workloads.

2. Why don't all companies build their own custom silicon for AI?

Designing custom silicon demands huge upfront investment, deep hardware expertise, long lead times, and enough scale to justify the cost, so most organizations rely on existing AI processors instead.

3. Do AI chip choices affect the environmental impact of AI?

Yes. More efficient AI chips can reduce energy use per training run or inference, which lowers data center power consumption and associated emissions for the same level of AI capability.

4. Can smaller startups compete if they can't access the latest NVIDIA AI chips?

They can, but often by using cloud-based AI instances, optimizing smaller models, or targeting specialized niches rather than training the largest frontier models that require top-tier hardware.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.