Abstract:

AI is a rapidly evolving field with the potential to affect almost all aspects of life and society. In this article, AI refers to all levels of artificial intelligence: Narrow AI, General AI, and Super AI. The article examines these categories, their characteristics and applications, and the effect AI has on society. It also looks at areas of AI research such as Machine Learning (ML), Deep Learning (DL), and Natural Language Processing (NLP), where they fit into the development of Narrow AI, and how much progress has been made so far toward General AI and Super AI. The article then describes how Generative AI systems are built on Large Language Models (LLMs), how LLMs are integrated into these systems, and their use cases in fields like healthcare, with an emphasis on guiding the behavior of AI. In addition, it discusses the opportunities and challenges in implementing Generative AI and the need for Responsible AI. The article also gives real-world examples of the potential of Generative AI to revolutionize industries and discusses how the broad societal implications of Gen AI can be taken into account. Finally, it emphasizes the need for collaboration among all participants to make sure that AI continues to move forward while remaining well aligned with our values and society's well-being.

AI Taxonomy: Narrow, General, and Super AI

Before getting too specific, we should broadly categorize the theoretical branches of AI based on the strength of their capabilities (Fjelland)[2]. These broad categories should be seen as points on a continuous scale, not as discrete or separate classifications.

Narrow AI: Also known as Weak AI, Narrow AI focuses on executing specific tasks, as seen in voice-activated assistants such as Siri and Alexa, facial recognition algorithms, and autonomous vehicles. Despite its proficiency, it is limited by its inability to perform tasks outside its defined scope. All publicly available AI products and services currently fall into this category.

General AI: Often referred to as Strong AI, General AI aims to mimic human cognition across various tasks. True General AI remains an ambitious goal in AI evolution, with challenges such as ethical considerations, safety measures, and control mechanisms slowing its development.

Super AI: This speculative concept signifies an AI system surpassing human intelligence in all aspects. Realizing Super AI would necessitate a comprehensive exploration of its potential ethical, societal, and existential impacts.

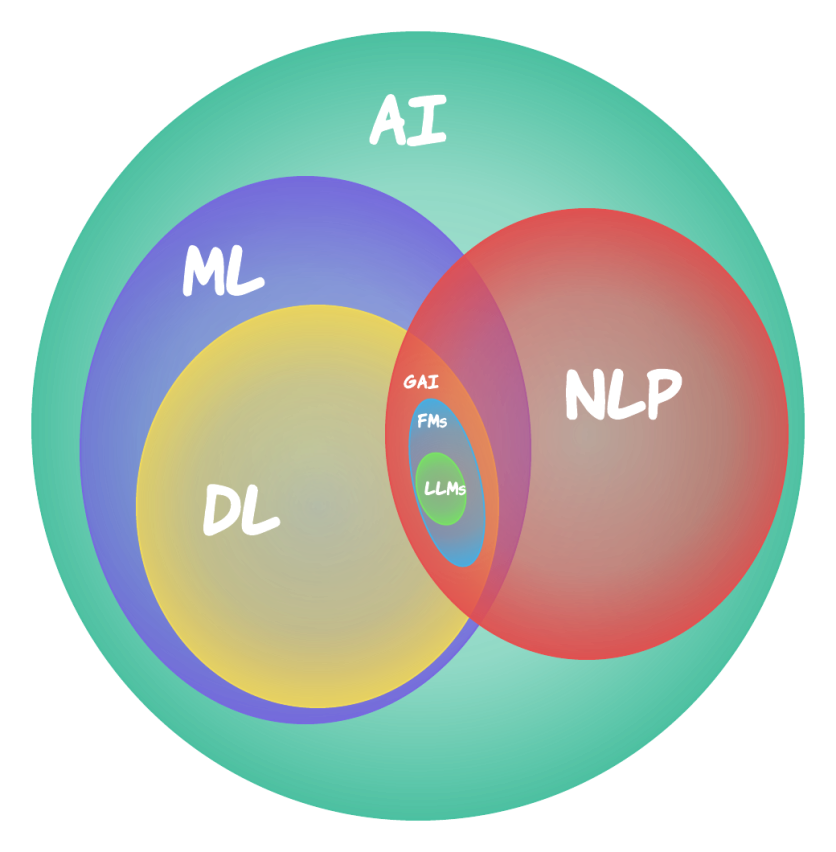

Now that we are familiar with the broad AI categories based on capabilities, we can look at how the methodologies used in past and present AI research are organized. These methodologies are not always strictly separate; many share techniques and algorithms and apply them to different applications. While there are many subsets of AI research, Machine Learning (ML), Deep Learning (DL), and Natural Language Processing (NLP) garner attention because of their diverse range of potential applications.

Delving into the AI Subsets: Machine Learning, Deep Learning, and Natural Language Processing (NLP)

Machine Learning (ML): ML, a broad branch of AI, enables machines to improve task performance using data without explicit programming. It comprises supervised learning for tasks like email classification, unsupervised learning for anomaly detection, and reinforcement learning for game-playing AI agents.

The phrase "without explicit programming" in this context doesn't mean that there is no programming involved at all. It means that once a machine learning algorithm is trained, it can make predictions or decisions based on data it hasn't seen before without needing explicit instructions for every possible scenario.

In traditional programming, a software engineer writes specific instructions that tell a computer what to do in every anticipated situation. If a new situation arises that wasn't accounted for in the original programming, the software will not be able to handle it effectively.

Conversely, machine learning uses algorithms that "learn" patterns in training data and make predictions or decisions based on those patterns. Once trained, these algorithms can handle new data that they haven't seen before. They don't need specific instructions for each possible input because they have learned general patterns from the training data, which they can apply to new data.

For example, in a spam filtering application (Dada et al.)[3], instead of writing specific rules to identify every possible type of spam email (which is virtually impossible), a machine learning algorithm that learns from examples of spam and non-spam emails can be employed. Once trained, the algorithm can then predict whether a new email it has never seen before is spam or not without needing explicit instructions for each possible email.
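To make the spam example concrete, here is a minimal sketch of a learned filter. It assumes a Naive Bayes classifier written from scratch, and the six training emails are made up for illustration; neither the data nor the function names `train` and `predict` come from the cited review. The point is only that no rule mentions a specific spam phrase: the behavior comes from word counts in the labeled examples.

```python
import math
from collections import Counter

# Tiny, made-up training set (hypothetical data for illustration only).
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("limited offer win cash now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
    ("lunch on monday with the team", "ham"),
]


def train(examples):
    """Count how often each word appears in each class."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocabulary


def predict(text, model):
    """Return the class with the higher log-probability for the text."""
    word_counts, class_counts, vocabulary = model
    total_examples = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start from the log prior: how common the class is overall.
        score = math.log(class_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero out the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING_DATA)
print(predict("claim your free cash prize", model))   # an email not in the training set
print(predict("project meeting on monday", model))    # another unseen email
```

With this toy data the first call prints `spam` and the second prints `ham`, even though neither sentence appears in the training set. A real filter would need far more data and careful evaluation.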

Deep Learning (DL): As a subset of ML, DL employs multi-layered neural networks for complex tasks, leading to advancements in computer vision and natural language processing. Notable types include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Generative Adversarial Networks (GANs), each serving unique purposes.

Natural Language Processing (NLP): NLP is a branch of artificial intelligence (AI) dedicated to facilitating interactions between computers and humans using natural language. It equips machines with the capability to comprehend, decode, and produce human language in a relevant and constructive manner (Russell et al.)[1]. NLP is closely interconnected with ML and DL: it applies ML and DL techniques to teach computers how to understand, interpret, and generate human language.

NLP with Machine Learning: Traditional NLP techniques often involve ML models such as Naive Bayes, Support Vector Machines, and Decision Trees to understand and interpret human language. These models usually rely on handcrafted, explicit features for classification or prediction tasks.

NLP with Deep Learning: In contrast, modern NLP has moved towards using DL due to its superior ability to understand context and manage high-dimensional data. Deep Learning for NLP, often referred to as neural NLP, has provided more accurate results for tasks like machine translation, sentiment analysis, and speech recognition. In deep learning for NLP, models such as Recurrent Neural Networks (RNNs), Long Short-Term Memory Networks (LSTMs), and newer Transformer-based models like GPT-3/4 and BERT are utilized.

In summary, ML and DL are fundamental to the functioning of NLP, providing the methods by which a system can learn from and make decisions or predictions based on data.

Traversing Towards Generative AI: The Role of Large Language Models

Generative AI is a category of artificial intelligence that employs Natural Language Processing, machine learning, and deep learning methods to produce novel content or data. It is often powered by large, pre-trained models known as foundation models (FMs). The new content generated can range from text to images, videos, and music, all created based on patterns learned from vast amounts of pre-existing data.

These models have been trained to learn patterns, relationships, and structures within the data they have been exposed to, and they use this understanding to generate novel content that mirrors these learned characteristics. The potential applications of Generative AI are vast and diverse, ranging from creating unique visual artwork to inventing new pharmaceutical compounds.

Large Language Models (LLMs): Foundation models include Large Language Models, which are specifically tailored to handle text data. They use NLP to process and understand language, and they are built using machine learning techniques, often involving deep learning architectures. They are trained on vast quantities of text data (often in the range of trillions of words), allowing them to understand and generate human-like text (Wei et al.)[4].

LLMs have the ability to engage in interactive conversations, answer questions, summarize dialogs and documents, and provide recommendations. They can be used in a variety of contexts and industries, including but not limited to creative writing for marketing, document summarization for legal purposes, market research in the financial sector, simulating clinical trials in healthcare, and even writing code for software development.

Large Language Models (LLMs), such as OpenAI's GPT-4, are vital stepping stones on the path to Gen AI. GPT-4 is a large multimodal model that can process both image and text inputs and produce text outputs. Though it is less capable than humans in many practical situations, it demonstrates human-level results on several professional and academic benchmarks. For example (Figure 2), it passed a simulated bar exam with a score around the top 10% of test takers (OpenAI)[5]. These models employ deep learning techniques and are trained on massive datasets to comprehend, summarize, generate, and predict text. More interestingly, we can ask them to adopt a particular tone in their responses or to show empathy.

![Figure 2 (OpenAI)[5]](https://d.techtimes.com/en/full/438151/figure-2-openai-65.png?w=836&f=838d8d4c809a0011e2a9787839b75e6d)

When we say an LLM shows empathy, we mean that it can generate text that humans typically associate with empathetic responses. For instance, if a user shares a problem, the model might generate a response like "I'm really sorry to hear that you're going through this." But the model doesn't feel sorry; it is just predicting that this is the kind of response a human might give in a similar situation, based on the patterns it has learned. By doing so, LLMs significantly enhance capabilities in natural language processing tasks, providing a glimpse of the potential of Gen AI.

The integration of Generative AI and Large Language Models into products and services is a rapidly growing trend. This has increased the demand for data scientists and engineers who are well-versed in these technologies and can apply them effectively to solve various business use cases.

Prospects and Challenges of Generative AI

The promise of Gen AI is immense. By possessing the capability to learn and reason across different domains, Gen AI could potentially contribute significantly to solving complex global challenges. For instance, in healthcare, Gen AI can be leveraged to create tailored treatment strategies by examining patient information and medical research literature (Yang et al.)[6].

However, the road to Gen AI is fraught with challenges. One concern is addressing hallucination and bias and ensuring ethical alignment (Ni et al.)[7]; Gen AI systems must align with human values and not be used for harmful purposes. Control is another issue: managing an AI system that outmatches human intelligence is uncharted territory. Another worry is job displacement, as an AI system capable of learning and reasoning in various fields might replace numerous roles, resulting in profound societal shifts. The unforeseen consequences of Gen AI are another area of concern, as we can't predict all the ways in which such a powerful technology might impact society.

Responsible AI is a concept that seeks to address these challenges by advocating for the development and use of AI in a manner that is fair, transparent, respects privacy, and ensures safety. This includes rigorous testing of AI systems before deployment, regularly auditing AI systems to ensure they are behaving as intended, and creating mechanisms to hold AI systems and their developers accountable (Chen et al.)[8].

Implications of Generative AI in the Real World

The advent of Gen AI has the potential to usher in a new era of technological advancements that could revolutionize industries, accelerate scientific discoveries, and redefine automation. For example, Gen AI could transform industries such as manufacturing by optimizing production processes or healthcare by improving disease diagnosis and treatment.

However, the adoption and integration of this technology necessitate a thorough consideration of its ethical, legal, and societal implications (Chen et al.)[8] (Bair and Norden)[9]. For instance, who is responsible if a Gen AI system makes a mistake? How do we ensure the privacy of individuals when Gen AI systems have access to vast amounts of data? How do we prevent the misuse of Gen AI by malicious actors? These are just a few of the questions that need to be addressed as we move closer to realizing the promise of Gen AI.

Real-world Application of Large Language Models in Generative AI

The intersection of Large Language Models (LLMs) and Generative AI shows substantial promise in revolutionizing major sectors such as healthcare. Here are examples of how LLMs can be applied in healthcare:

1. Clinical Diagnosis and Treatment Suggestions: LLMs can help improve the accuracy and efficiency of diagnosing diseases and recommending treatments. They can analyze patient symptoms, medical history, and a vast array of scientific literature to provide healthcare professionals with potential diagnoses and treatment options. This can be especially advantageous in intricate scenarios where determining a diagnosis is difficult.

2. Personalized Medicine and Health Plans: Personalized healthcare is a rapidly growing field, and LLMs are well-suited to this kind of application. By analyzing an individual's genetic makeup, lifestyle, and other factors, these models can provide personalized health advice, diet plans, and exercise regimes.

3. Medical Research and Drug Discovery: LLMs can help accelerate the process of medical research and drug discovery. By analyzing large volumes of scientific literature, these models can identify potential links between studies and suggest novel hypotheses. They can also predict the properties of new drugs and their potential uses.

4. Health Data Analysis and Predictive Healthcare: By analyzing large datasets, LLMs can identify trends and patterns that might not be evident to human analysts. This can be used to predict disease outbreaks or identify patients at high risk of certain conditions, enabling preventative measures to be taken.

5. Mental Health Support: LLMs can provide mental health support, offering resources for cognitive-behavioral therapy and mental health assessments. They can also suggest when professional intervention may be needed.

6. Enhancing Patient-Physician Interactions: LLMs can improve communication between patients and physicians by transcribing medical conversations, providing relevant medical information during consultations, and assisting doctors in staying current with recent medical studies.

7. Health Chatbots and Virtual Health Assistants: AI-powered chatbots can provide round-the-clock medical advice, guide patients through symptom checkers, facilitate the booking of medical appointments, and offer medication and treatment reminders.

8. Telemedicine and Remote Care: With the advent of telemedicine, LLMs can monitor patient health status remotely, recommend self-care based on symptoms and history, and facilitate online consultations with healthcare providers.

Moreover, the impact of LLMs on healthcare professionals is immense. They can enhance the decision-making process, reduce the risk of human error, automate routine tasks, and streamline workflow. LLMs can help healthcare professionals stay updated with the latest medical research and advancements, improve patient engagement and communication, and achieve a better work-life balance. In sum, by complementing human skills with AI capabilities, we can elevate the level of care delivered to patients and improve the working conditions for healthcare professionals.

Understanding the Role of Prompting in LLMs

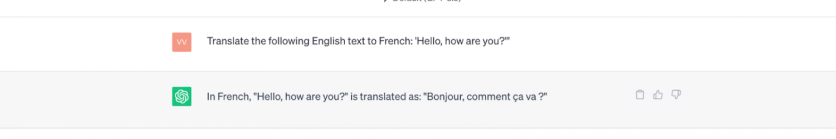

Prompting, or providing a specific input to guide the output of an LLM, is essential to harness the power of these models. For example, when using an LLM to translate English text into French, a prompt can instruct the model to perform the task effectively, thus improving its applicability and utility.

Dissecting the Art of Prompting

1. Zero-Shot Prompting: In this approach, the language model is not given any explicit examples and is expected to infer the nature of the task solely based on the given prompt.

For instance, if we provide a model with the prompt, "Translate the following English text to French: 'Hello, how are you?'" the model should generate the French equivalent of the English sentence. Here, the model has not been trained specifically for translation tasks and has no examples provided at runtime but is expected to translate based on its previous training.

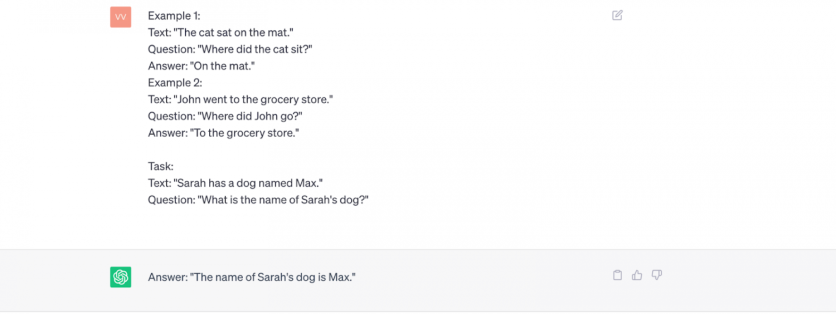
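As a minimal sketch, the zero-shot prompt above can be assembled from an instruction and an input text with no worked examples attached. The helper name `build_zero_shot_prompt` is hypothetical, and the code stops at building the string, since how the prompt is sent to a model depends on the provider.

```python
def build_zero_shot_prompt(instruction: str, text: str) -> str:
    """Combine a task instruction and the input text, with no worked examples."""
    return f"{instruction}: '{text}'"


prompt = build_zero_shot_prompt(
    "Translate the following English text to French", "Hello, how are you?"
)
print(prompt)
```

This prints the same prompt used in the example: `Translate the following English text to French: 'Hello, how are you?'`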

2. Few-Shot Prompting: Few-shot prompting is a type of instruction in which the model is given a few examples of the task at hand along with the input. The aim is to help the model understand the context of the task and perform the operation more effectively.

For instance, if we want a model to answer questions about a specific text passage, we might provide two or three examples of similar questions and their correct answers before posing our question.

One example of few-shot prompting could look like this:

Example 1:

Text: "The cat sat on the mat."

Question: "Where did the cat sit?"

Answer: "On the mat."

Example 2:

Text: "John went to the grocery store."

Question: "Where did John go?"

Answer: "To the grocery store."

Task:

Text: "Sarah has a dog named Max."

Question: "What is the name of Sarah's dog?"

Here, the model uses the examples to understand that it needs to provide information from the given text in response to a question. It can then use this pattern to answer the final question.
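The few-shot layout above can also be generated programmatically. The sketch below is one possible way to do it, not a prescribed format: the `Example` class and `build_few_shot_prompt` function are hypothetical names, and the trailing `Answer:` line is an assumption about how to signal where the model should continue.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked example: a passage, a question about it, and the answer."""
    text: str
    question: str
    answer: str


def build_few_shot_prompt(examples, text, question):
    """Place the worked examples first, then the new task with the answer left open."""
    blocks = []
    for number, example in enumerate(examples, start=1):
        blocks.append(
            f"Example {number}:\n"
            f'Text: "{example.text}"\n'
            f'Question: "{example.question}"\n'
            f'Answer: "{example.answer}"'
        )
    # The final block repeats the pattern but leaves the answer for the model.
    blocks.append(f'Task:\nText: "{text}"\nQuestion: "{question}"\nAnswer:')
    return "\n\n".join(blocks)


examples = [
    Example("The cat sat on the mat.", "Where did the cat sit?", "On the mat."),
    Example("John went to the grocery store.", "Where did John go?", "To the grocery store."),
]
prompt = build_few_shot_prompt(
    examples, "Sarah has a dog named Max.", "What is the name of Sarah's dog?"
)
print(prompt)
```

Keeping the examples in a list makes it easy to vary how many are included and compare the model's answers.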

3. Chain-of-Thought (CoT) Prompting: Chain-of-Thought prompting is a method in which the prompt asks the model to work through a problem step by step, writing out its intermediate reasoning before giving a final answer. This technique is widely used for tasks that require multi-step reasoning, such as arithmetic, logic, and planning. It should not be confused with multi-turn conversational prompting, used in chatbots, where each new prompt simply builds on the model's previous responses.

For instance, a CoT prompting exchange might look like this:

* Human: "A clinic sees 12 patients in the morning and twice as many in the afternoon. How many patients does it see in total? Think step by step."

* AI: "In the morning the clinic sees 12 patients. In the afternoon it sees twice as many, which is 2 × 12 = 24. In total it sees 12 + 24 = 36 patients. The answer is 36."

In the above example, the prompt asks for intermediate steps, so the model writes out its reasoning before stating the final answer. Spelling out the steps tends to improve accuracy on multi-step problems and makes the response easier to check.

The Significance of Prompting in Generative AI

Prompting offers a means to guide the behavior of Gen AI, enabling humans to effectively leverage its potential. The significance of prompting in Gen AI lies in its ability to control and guide AI systems to generate useful and contextually appropriate outputs. It not only enables better control and guidance of AI responses but also fosters the ethical use of AI (Bozkurt and Sharma) [10]. Prompting is critical for several reasons:

Contextual Understanding and Response: Prompting helps an AI system to better understand the context and desired output for a given task. By using a well-crafted prompt, AI models can generate more useful and accurate responses.

For example, a health-focused AI model given the prompt "What is the recommended treatment for pneumonia?" will understand from the context that it is expected to provide information about the various options for treating pneumonia. However, if the prompt is more specific, such as "What is the recommended antibiotic treatment for bacterial pneumonia in adults?" the AI will generate a more targeted and, thus, likely more useful response, focusing on specific antibiotic regimens suitable for adults.

Task Specification: Prompts specify the task that the AI system is supposed to accomplish. They guide the system's focus and determine the kind of information it needs to process and the kind of response it needs to generate. This is particularly important in complex tasks where the desired output can vary widely based on the input prompt.

As an example, consider the following two prompts given to an AI working in the legal field: "Explain the concept of contract law" versus "Identify potential breaches of contract in the following scenario...". The first prompt instructs the AI to provide a general explanation, while the second prompts it to analyze a specific situation.

Model Flexibility: Language models such as GPT-4 boast great versatility and can undertake various tasks just by altering the input prompt. This makes prompting a key tool for leveraging these models' versatility.

For instance, the same AI model can answer trivia questions, generate a short story, translate text, or summarize a document, all based on the prompts it is given. This demonstrates the model's ability to shift roles simply by interpreting and following different prompts, showcasing an unparalleled level of flexibility.

Controlling Bias and Ethical Considerations: Carefully designed prompts can help mitigate the risk of biased or unethical outputs from the AI system. By specifying the kind of responses that are not acceptable, prompts can help guide the system toward more ethical and fair responses.

For example, an AI model could be prompted to generate descriptions of people, and the prompt could specify that these descriptions should not rely on stereotypes or make assumptions based on race, gender, or other personal attributes. However, it is important to note that prompt design is only one aspect of addressing AI bias and ethics, and it needs to be complemented with other strategies, like bias detection and mitigation in the training data and processes.

Promoting Creativity and Innovation: In some contexts, such as content creation or brainstorming, prompts can be used to guide AI towards more creative and novel outputs. By posing interesting and challenging prompts, users can harness the AI's capability to generate a wide array of ideas. For instance, in a brainstorming session for new product ideas, a prompt like "Design a product that combines the functions of a smartphone and a coffee maker" could lead the AI to generate a variety of unique and creative ideas that combine elements of both devices in surprising ways. Similarly, in a content creation setting, a prompt like "Write a story set in a world where gravity changes direction every hour" could result in an engaging and original narrative.

Conclusion:

The continual advancements in AI, ML, DL, LLMs, and Gen AI are reshaping our societal landscape. As AI evolves, safeguarding its ethical and responsible development is paramount. Navigating the complex terrain of AI, from Narrow AI's task-specific capabilities to the aspirational General AI and the speculative Super AI, requires a careful balance between innovation, ethics, and safety. As we continue exploring the realm of AI, collective efforts from researchers, policymakers, and stakeholders are imperative to ensure these technologies align with the best interests of humanity.

Works Cited

1. Russell, Stuart Jonathan, et al. Artificial Intelligence: A Modern Approach. Prentice Hall, 2009, https://scholar.alaqsa.edu.ps/9195/1/Artificial%20Intelligence%20A%20Modern%20Approach%20%283rd%20Edition%29.pdf%20%28%20PDFDrive%20%29.pdf.

2. Fjelland, Ragnar. "Why general artificial intelligence will not be realized." Humanities and Social Sciences Communications, vol. 7, no. 1, 2020, pp. 1-9, https://www.nature.com/articles/s41599-020-0494-4#citeas.

3. Dada, Emmanuel Gbenga, et al. "Machine learning for email spam filtering: review, approaches and open research problems." Heliyon, vol. 5, no. 6, 2019, https://www.cell.com/heliyon/pdf/S2405-8440(18)35340-4.pdf.

4. Wei, Jason, et al. "Emergent abilities of large language models." arXiv preprint arXiv:2206.07682, 2022, https://doi.org/10.48550/arXiv.2206.07682.

5. OpenAI. "GPT-4 Technical Report." arXiv preprint arXiv:2303.08774v3, 2023, p. 100, https://doi.org/10.48550/arXiv.2303.08774.

6. Yang, Xi, et al. "A large language model for electronic health records." NPJ Digital Medicine, vol. 5, no. 1, 2022, p. 194, https://www.nature.com/articles/s41746-022-00742-2.

7. Ni, Jingwei, et al. "CHATREPORT: Democratizing Sustainability Disclosure Analysis through LLM-based Tools." arXiv preprint arXiv:2307.15770, 2023, https://arxiv.org/pdf/2307.15770.pdf.

8. Chen, Chen, et al. "A pathway towards responsible AI generated content." arXiv preprint arXiv:2303.01325, 2023, https://doi.org/10.48550/arXiv.2303.01325.

9. Bair, Henry, and Justin Norden. "Large language models and their implications on medical education." Academic Medicine, vol. 98, no. 8, 2023, https://journals.lww.com/academicmedicine/Fulltext/2023/08000/Large_Language_Models_and_Their_Implications_on.6.aspx.

10. Bozkurt, Aras, and Ramesh C Sharma. "Generative AI and prompt engineering: The art of whispering to let the genie out of the algorithmic world." Asian Journal of Distance Education, vol. 18, no. 2, 2023, https://www.researchgate.net/profile/Aras-Bozkurt/publication/372650445_Generative_AI_and_Prompt_Engineering_The_Art_of_Whispering_to_Let_the_Genie_Out_of_the_Algorithmic_World/links/64c1af66c41fb852dd9d8ace/Generative-AI-and-Prompt-Engineering-The-Art-of-Whispering-to-Let-the-Genie-Out-of-the-Algorithmic-World.pdf

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.