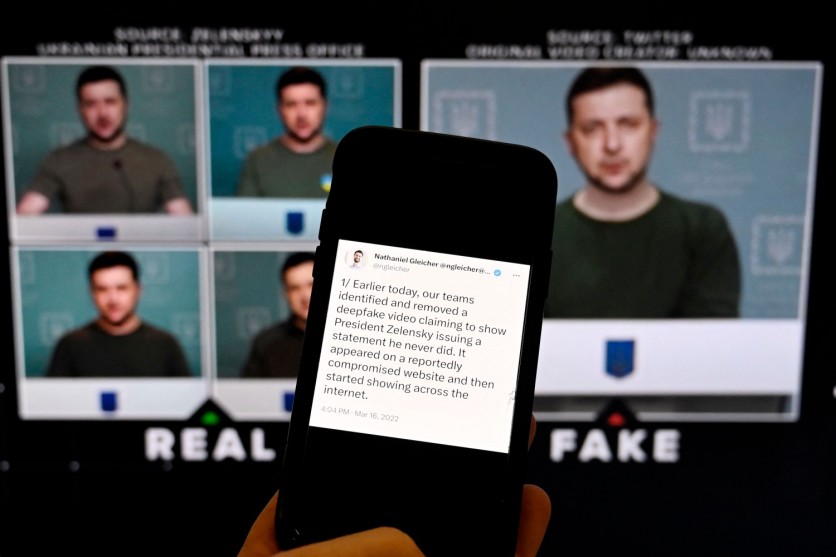

Amid mounting concerns over misinformation and deepfakes ahead of upcoming global elections, Meta has unveiled a robust expansion of its efforts to identify AI-manipulated images.

"The rise of cheap and easy-to-use generative AI tools, the lack of legal guardrails for their deployment, and relaxed content moderation policies at tech companies are creating a perfect misinformation storm," Chad Latz, the Chief Innovation Officer at BCW Global told Tech Times in an interview. "The disinformation ecosystem has eroded confidence in election outcomes combined with acute socio-political polarization.

Just 48.9% of U.S. adults have confidence in the integrity of the presidential election regardless of which candidate wins, according to a fall 2023 survey conducted by cognitive AI company Limbik.

"In the instances where generative AI may be used to support election activity, even with the best of intentions, concerns about hallucinations, accuracy, and model collapse of LLMs (large language models) are a reality and are well documented," Latz said.

Meta's Tools in the Deepfake Fight

Meta announced that the labels for AI-generated content will be displayed in all the languages supported by each app, though the implementation will be gradual. Nick Clegg, the President of Global Affairs at Meta, stated in a blog post that the company will start applying labels to AI-generated images from external sources "in the coming months" and will persist in addressing this issue "over the next year." He explained that additional time is necessary for collaborating with other AI companies to establish unified technical standards indicating when AI has produced content.

In an interview, Sam Crowther, the CEO of Kasada, predicted that disinformation would be at an all-time high in 2024, especially leading into the U.S. election. Historically, disinformation campaigns were launched only by nation-state threat actors, he said. However, with the increase of bot-related services, anyone can now spread these campaigns without the staffing they once required, with just a few clicks and a few bucks.

"In addition, these disinformation campaigns will be more convincing this election thanks to advancements in generative AI," Crowther added. "Generative AI allows bots to operate at scale and personalize their messages down to the individual account - instead of posting the same message over and over. This will make disinformation more persuasive and more difficult for social media platforms to detect and remove."

The concern surrounding AI in elections is closely tied to the global rise of misinformation, a trend that has been prominent since at least 2016, said Simon James, Managing Director of Data & AI at Publicis Sapient. The challenge lies in the sheer volume of misleading information generated, making it difficult to keep pace with its output.

"While AI can also be employed to analyze the validity of content and flag potential issues, this dynamic has sparked an ongoing and escalating arms race," he added. "Insurance firms are witnessing an increase in claims for items existing solely as AI images, leading to rejections by different AI models."

Many experts say efforts like the one by Meta to prevent disinformation are doomed to fail. Malicious actors are unlikely to use commercial services, where their activity is likely to be both tracked and blocked, because alternative, open-source AI models and tools are free and widely available, said Kjell Carlsson, Head of AI Strategy at Domino Data Lab.

"Instead, AI companies will ultimately be more successful in preventing this misuse by offering tools for detecting AI-generated content and by lobbying governments to outlaw the use of their tools for malicious purposes," he added.

Carlsson is among those calling for more regulation of the misuse of AI for election interference.

"In the interim, the best steps that can be taken to reduce the risk are by social media platforms, news outlets, and other content providers, who must implement tools and processes to detect, identify, and remove malicious, AI-generated content," he said.

Ideally, all tech companies would agree to an AI bill of rights to set basic standards for responsible AI development, said Tom Guarente, VP of External and Government Affairs at Armis. The effort is complicated because it involves many company departments and the entire data economy.

"However, some steps can be taken - auditing algorithms and data for bias, enabling external oversight to build public trust and empowering employees at every level to question unethical practices," he added. "Additionally, transparent and frequent public communication by both private companies and government agencies regarding observed risk patterns and malicious activities can bolster awareness and responsibility. Promoting AI safety will require acknowledging risks, developing ethical guardrails tailored to election integrity, and pursuing accountability to the public."

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.