Apple's CSAM detection system has caught its first predator amid the controversy surrounding the system.

Federal authorities reported that a doctor from San Francisco had been charged with possessing child pornography.

The doctor is said to have stored the explicit images in his iCloud account.

Apple's CSAM Detection Catches San Francisco Doctor

On Aug. 19, the US Department of Justice announced that the doctor, 58-year-old Andrew Mollick, had allegedly stored 2,000 sexually exploitative images and videos of minors in his iCloud account, according to AppleInsider.

Mollick is an oncology specialist connected with several San Francisco Bay Area medical facilities. He is also an associate professor at the UCSF School of Medicine.

According to the unsealed federal complaint, as reported by KRON 4, Mollick allegedly uploaded one of the illegal images to a social media app called Kik.

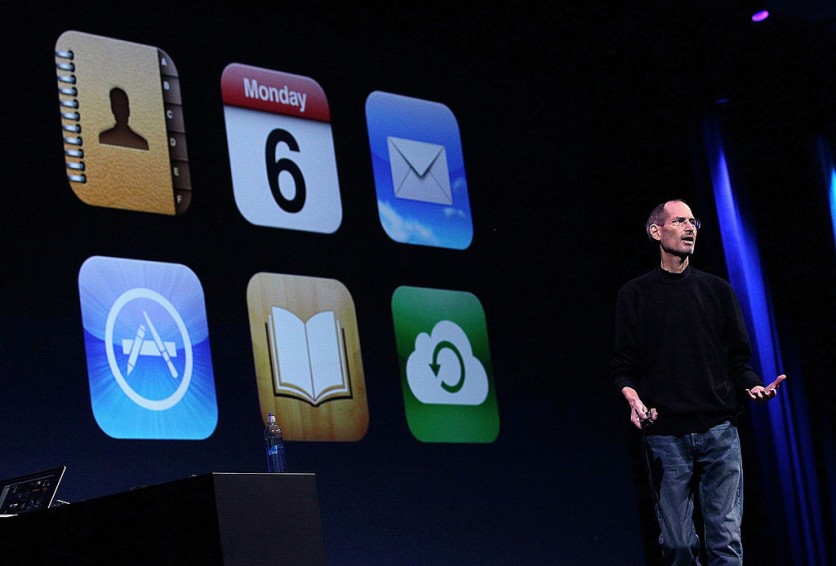

Apple recently announced plans to launch a system designed to detect child sexual abuse material (CSAM) in iCloud later this year.

Flagged accounts will be reported to the National Center for Missing and Exploited Children (NCMEC).

The system relies heavily on cryptographic techniques to preserve user privacy, even though it checks the hashes of photos on the device before they are uploaded to iCloud.

However, the plan immediately drew criticism from cybersecurity experts and digital rights activists.

The system does not actually scan the content of the pictures in a user's iCloud account. Instead, it relies solely on matching the hashes of photos bound for iCloud against a database of known CSAM hashes provided by several child safety organizations.

An account is flagged only after about 30 matching images are found, a threshold meant to help minimize false positives.
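To make the threshold idea concrete, here is a toy sketch in Python of hash matching with a minimum match count. It is not Apple's implementation. It assumes plain SHA-256 as a stand-in for Apple's perceptual NeuralHash, and it leaves out the private set intersection and threshold secret sharing Apple described for keeping match results hidden below the threshold. The names `image_hash`, `count_matches` and `should_flag` are hypothetical.

```python
import hashlib
from collections.abc import Iterable

# Threshold taken from the figure reported above; purely illustrative here.
MATCH_THRESHOLD = 30


def image_hash(data: bytes) -> str:
    """Stand-in hash (assumption). SHA-256 only matches byte-identical files,
    whereas a perceptual hash such as NeuralHash is designed to survive
    resizing and re-encoding. This toy does not model that."""
    return hashlib.sha256(data).hexdigest()


def count_matches(photos: Iterable[bytes], known_hashes: set[str]) -> int:
    """Count how many photos hash to an entry in the known-hash database."""
    return sum(1 for photo in photos if image_hash(photo) in known_hashes)


def should_flag(photos: Iterable[bytes], known_hashes: set[str],
                threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag only once matches reach the threshold, so a few stray
    false-positive matches cannot trigger a report on their own."""
    return count_matches(photos, known_hashes) >= threshold


if __name__ == "__main__":
    # Hypothetical database of 40 known hashes.
    known = {image_hash(b"known-image-%d" % i) for i in range(40)}
    # 29 matching photos plus one unrelated photo: below the threshold.
    library = [b"known-image-%d" % i for i in range(29)] + [b"holiday-photo"]
    print(should_flag(library, known))  # False
```

The point of the threshold is visible in the usage: 29 matches are not enough to flag the account, while a 30th would be.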

Court documents revealed during Epic Games' lawsuit against Apple showed that Eric Friedman, the tech giant's anti-fraud chief, had written that the company's services were the greatest platform for distributing CSAM.

Friedman attributed this to Apple's strong stance on user privacy.

Despite the controversy, Apple is determined to press ahead with launching the CSAM detection system, insisting that it will still protect users' security and privacy.

University Researchers Say CSAM Detection Is Dangerous

The tech giant has repeatedly assured the public that the CSAM detection system is safe, but experts continue to dispute that claim.

According to MacRumors, several respected university researchers are warning the public about the technology behind Apple's CSAM detection system, calling it "dangerous."

Even Apple employees are concerned about the detection system.

Jonathan Mayer of Princeton University and Anunay Kulshrestha, a researcher at the Princeton University Center for Information Technology Policy, wrote about the system in The Washington Post.

The two described their own experience building detection technology.

In 2019, the researchers began a project to identify CSAM in end-to-end encrypted online services.

The researchers wrote that, given their field, they understand the value of end-to-end encryption, which protects user data from third-party access. That is also why CSAM concerns them: it proliferates on encrypted platforms.

Kulshrestha and Mayer said they wanted to create a system that online platforms could use to find illegal photos while preserving end-to-end encryption.

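The researchers also wrote that they had doubted a system like Apple's was feasible, but they managed to build one and, in the process, identified a serious problem: the same design could be repurposed for surveillance or censorship simply by swapping in a different database of content.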

Despite the backlash, Apple maintains that the detection system aligns with its privacy values.

This article is owned by Tech Times

Written by Sophie Webster

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.