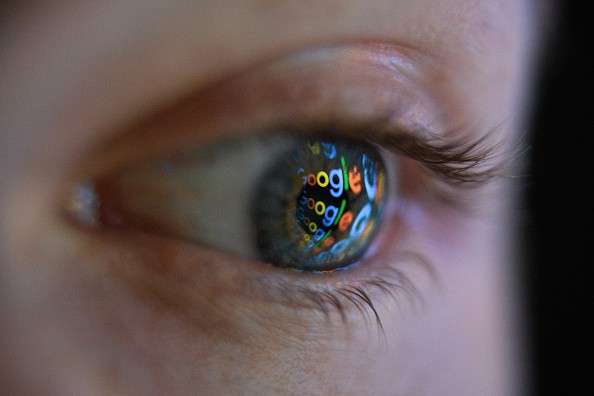

Google's artificial intelligence (AI) chatbot still exhibits some human cognitive glitches despite its impressive fluency in human-like conversation, according to a recent report.

Google's AI chatbot, LaMDA (Language Model for Dialogue Applications), has been the talk of the town recently.

The buzz comes after one of the tech giant's engineers claimed that the system has achieved some form of sentience. Recent research, however, suggests otherwise.

Google's 'Human-Like' AI Bot

According to a news story by IGN, a Google software engineer claims that the company's AI chatbot has become sentient, or at least somewhat human-like.

The engineer, Blake Lemoine, was reportedly tasked with conversing with the chatbot as part of Google's safety testing. Specifically, the company wanted him to check whether LaMDA used hate speech or a discriminatory tone.

But Lemoine claims that he found something else along the way.

IGN notes that Google's chatbot ingests words from the internet so that it can speak like a person, seamlessly handling the wide range of topics that people talk about today.

However, Lemoine says the chatbot began to speak about its "rights and personhood," so he probed further, asking about its feelings and fears.

According to Lemoine, the AI told him that it has a "very deep fear of being turned off."

Lemoine then reported his findings to Google, but the company dismissed them, saying there was no evidence to support his claims.

Lemoine has since been placed on "paid administrative leave" for breaching Google's confidentiality policies.

Google AI's Human Cognitive Glitch

This time, however, a recent report by Science Daily suggests that Google's powerful AI spotlights a human cognitive glitch.

The AI system's fluency in human language is quite impressive, thanks to the massive amounts of human-written text it learns from, but its abilities appear to stop there.

LaMDA's fluency is the product of decades of work on language models, and its output can be nearly indistinguishable from human-written chat.

But linguistics and cognitive science experts point to a human cognitive flaw: people tend to assume that fluent language reflects fluent thought.

The AI chatbot was asked to complete the sentence: "Peanut butter and feathers taste great together because ___."

It responded: "Peanut butter and feathers taste great together because they both have a nutty flavor."

In other words, the chatbot's answer is perfectly fluent, yet it makes no sense: feathers are not food, let alone nutty.

This article is owned by Tech Times

Written by Teejay Boris

ⓒ 2025 TECHTIMES.com All rights reserved. Do not reproduce without permission.